On Random Linear Network Coding for Butterfly Network

Xuan Guang and Fang-Wei Fu

arXiv:1001.2077v2 [cs.IT] 18 Jan 2010

Abstract: Random linear network coding is a feasible encoding tool for network coding, especially for non-coherent networks, and its performance is important in theory and application. In this letter, we study the performance of random linear network coding for the well-known butterfly network by analyzing the failure probabilities. We determine the failure probabilities of random linear network coding for the well-known butterfly network and for the butterfly network with channel failure probability $p$.

Index Terms: Network coding, random linear network coding, butterfly network, failure probabilities.

This research is supported in part by the National Natural Science Foundation of China under the Grants 60872025 and 10990011.
X. Guang is with the Chern Institute of Mathematics, Nankai University, Tianjin 300071, P.R. China. Email: xuanguang@mail.nankai.edu.cn
F.-W. Fu is with the Chern Institute of Mathematics and LPMC, Nankai University, Tianjin 300071, P.R. China. Email: fwfu@nankai.edu.cn

I. INTRODUCTION

In the seminal paper [1], Ahlswede et al. showed that with network coding, the source node can multicast information to all sink nodes at the theoretically maximum rate as the alphabet size approaches infinity, where the theoretically maximum rate is the smallest minimum cut capacity between the source node and any sink node. Li et al. [2] showed that linear network coding with a finite alphabet is sufficient for multicast. Moreover, the well-known butterfly network was proposed in [1], [2] to show the advantages of network coding compared with routing. Koetter and Médard [3] presented an algebraic characterization of network coding. Ho et al. [4] proposed random linear network coding and gave several upper bounds on its failure probabilities. Balli, Yan, and Zhang [5] improved on these bounds and discussed the limit behavior of the failure probability as the field size goes to infinity.

In this letter, we study the failure probabilities of random linear network coding for the well-known butterfly network when it is considered as a single source multicast network described by Fig. 1, and discuss the limit behaviors of the failure probabilities as the field size goes to infinity.

[Fig. 1. Butterfly network, where $d_1, d_2$ represent two imaginary incoming channels of the source node $s$. Nodes: $s$, $s_1$, $s_2$, $i$, $j$, $t_1$, $t_2$; channels: $e_1, \dots, e_9$.]

II. FAILURE PROBABILITIES OF RANDOM LINEAR NETWORK CODING FOR BUTTERFLY NETWORK

In this letter, we always denote by $G = (V, E)$ the butterfly network shown in Fig. 1, where the single source node is $s$, the set of sink nodes is $T = \{t_1, t_2\}$, the set of internal nodes is $J = \{s_1, s_2, i, j\}$, and the set of channels is $E = \{e_1, e_2, e_3, e_4, e_5, e_6, e_7, e_8, e_9\}$. Moreover, we assume that each channel $e \in E$ is error-free and has unit capacity. It is obvious that the maximum flow to each of the sink nodes $t_1$ and $t_2$ is 2.

Now we consider the random linear network coding problem with information rate 2 for this butterfly network $G$. That is to say, for the source node $s$ and the internal nodes, all local encoding coefficients are independent, uniformly distributed random variables taking values in the base field $F$. Although the source node $s$ has no incoming channels, we use two imaginary incoming channels $d_1, d_2$ and assume that they provide the source messages for $s$. Let the global encoding kernels of $d_1, d_2$ be $f_{d_1} = \begin{pmatrix} 1 \\ 0 \end{pmatrix}$ and $f_{d_2} = \begin{pmatrix} 0 \\ 1 \end{pmatrix}$, respectively. The local encoding kernel at the source node $s$ is denoted as

$$K_s = \begin{pmatrix} k_{d_1,1} & k_{d_1,2} \\ k_{d_2,1} & k_{d_2,2} \end{pmatrix}.$$

Similarly, we denote by $f_i$ the global encoding kernel of channel $e_i$ $(1 \le i \le 9)$, and by $k_{i,j}$ the local encoding coefficient for the pair $(e_i, e_j)$ with $\mathrm{head}(e_i) = \mathrm{tail}(e_j)$ as described in [6, p. 442], where $\mathrm{tail}(e_i)$ represents the node whose outgoing channels include $e_i$, and $\mathrm{head}(e_i)$ represents the node whose incoming channels include $e_i$:

$$f_1 = k_{d_1,1} f_{d_1} + k_{d_2,1} f_{d_2} = \begin{pmatrix} k_{d_1,1} \\ k_{d_2,1} \end{pmatrix}, \qquad f_2 = k_{d_1,2} f_{d_1} + k_{d_2,2} f_{d_2} = \begin{pmatrix} k_{d_1,2} \\ k_{d_2,2} \end{pmatrix},$$

$$f_3 = k_{1,3} f_1 = \begin{pmatrix} k_{d_1,1} k_{1,3} \\ k_{d_2,1} k_{1,3} \end{pmatrix}, \qquad f_4 = k_{1,4} f_1 = \begin{pmatrix} k_{d_1,1} k_{1,4} \\ k_{d_2,1} k_{1,4} \end{pmatrix},$$

$$f_5 = k_{2,5} f_2 = \begin{pmatrix} k_{d_1,2} k_{2,5} \\ k_{d_2,2} k_{2,5} \end{pmatrix}, \qquad f_6 = k_{2,6} f_2 = \begin{pmatrix} k_{d_1,2} k_{2,6} \\ k_{d_2,2} k_{2,6} \end{pmatrix},$$

$$f_7 = k_{4,7} f_4 + k_{5,7} f_5 = \begin{pmatrix} k_{d_1,1} k_{1,4} k_{4,7} + k_{d_1,2} k_{2,5} k_{5,7} \\ k_{d_2,1} k_{1,4} k_{4,7} + k_{d_2,2} k_{2,5} k_{5,7} \end{pmatrix},$$

$$f_8 = k_{7,8} f_7 = \begin{pmatrix} k_{d_1,1} k_{1,4} k_{4,7} k_{7,8} + k_{d_1,2} k_{2,5} k_{5,7} k_{7,8} \\ k_{d_2,1} k_{1,4} k_{4,7} k_{7,8} + k_{d_2,2} k_{2,5} k_{5,7} k_{7,8} \end{pmatrix},$$

$$f_9 = k_{7,9} f_7 = \begin{pmatrix} k_{d_1,1} k_{1,4} k_{4,7} k_{7,9} + k_{d_1,2} k_{2,5} k_{5,7} k_{7,9} \\ k_{d_2,1} k_{1,4} k_{4,7} k_{7,9} + k_{d_2,2} k_{2,5} k_{5,7} k_{7,9} \end{pmatrix},$$

and the decoding matrices of the sink nodes $t_1, t_2$ are

$$F_{t_1} = (f_3 \; f_8) = \begin{pmatrix} k_{d_1,1} k_{1,3} & k_{d_1,1} k_{1,4} k_{4,7} k_{7,8} + k_{d_1,2} k_{2,5} k_{5,7} k_{7,8} \\ k_{d_2,1} k_{1,3} & k_{d_2,1} k_{1,4} k_{4,7} k_{7,8} + k_{d_2,2} k_{2,5} k_{5,7} k_{7,8} \end{pmatrix}, \tag{1}$$

$$F_{t_2} = (f_6 \; f_9) = \begin{pmatrix} k_{d_1,2} k_{2,6} & k_{d_1,1} k_{1,4} k_{4,7} k_{7,9} + k_{d_1,2} k_{2,5} k_{5,7} k_{7,9} \\ k_{d_2,2} k_{2,6} & k_{d_2,1} k_{1,4} k_{4,7} k_{7,9} + k_{d_2,2} k_{2,5} k_{5,7} k_{7,9} \end{pmatrix}. \tag{2}$$

A. Butterfly Network without Fail Channels

Next, we discuss the failure probabilities of random linear network coding for the butterfly network $G$. These failure probabilities illustrate the performance of random linear network coding for the butterfly network. First, we give the definitions of the failure probabilities.

Definition 1: For random linear network coding for the butterfly network $G$ with information rate 2:
• $P_e(t_i) \triangleq \Pr(\mathrm{Rank}(F_{t_i}) < 2)$ is called the failure probability of sink node $t_i$, that is, the probability that the messages cannot be decoded correctly at $t_i$ $(i = 1, 2)$;
• $P_e \triangleq \Pr(\exists\, t \in T \text{ such that } \mathrm{Rank}(F_t) < 2)$ is called the failure probability of the butterfly network $G$, that is, the probability that the messages cannot be decoded correctly at at least one sink node in $T$;
• $P_{av} \triangleq \frac{\sum_{t \in T} P_e(t)}{|T|}$ is called the average failure probability of all sink nodes for the butterfly network $G$.

To determine the failure probabilities of random linear network coding for the butterfly network $G$, we need the following lemma.

Lemma 1: Let $M$ be a uniformly distributed random $2 \times 2$ matrix over a finite field $F$. Then the probability that $M$ is invertible is $(|F|+1)(|F|-1)^2 / |F|^3$.

Theorem 1: For random linear network coding for the butterfly network $G$ with information rate 2:
• the failure probability of the sink node $t_i$ $(i = 1, 2)$ is
$$P_e(t_i) = 1 - \frac{(|F|+1)(|F|-1)^6}{|F|^7};$$
• the failure probability of the butterfly network $G$ is
$$P_e = 1 - \frac{(|F|+1)(|F|-1)^{10}}{|F|^{11}};$$
• the average failure probability is
$$P_{av} = 1 - \frac{(|F|+1)(|F|-1)^6}{|F|^7}.$$

Proof: Recall that $K_s = (f_1 \; f_2)$ is the local encoding kernel at the source node $s$. Denote

$$B_1 \triangleq \begin{pmatrix} k_{1,3} & k_{1,4} k_{4,7} k_{7,8} \\ 0 & k_{2,5} k_{5,7} k_{7,8} \end{pmatrix}, \qquad B_2 \triangleq \begin{pmatrix} 0 & k_{1,4} k_{4,7} k_{7,9} \\ k_{2,6} & k_{2,5} k_{5,7} k_{7,9} \end{pmatrix}.$$

Then, we know from (1) and (2) that $F_{t_1} = K_s B_1$ and $F_{t_2} = K_s B_2$. Moreover, from Lemma 1, we have

$$\begin{aligned}
1 - P_e(t_1) &= \Pr(\mathrm{Rank}(F_{t_1}) = 2) = \Pr(\det(F_{t_1}) \neq 0) \\
&= \Pr(\{\det(K_s) \neq 0\} \cap \{\det(B_1) \neq 0\}) \\
&= \Pr(\det(K_s) \neq 0) \cdot \Pr(\det(B_1) \neq 0) \\
&= \frac{(|F|+1)(|F|-1)^2}{|F|^3} \left( \frac{|F|-1}{|F|} \right)^4 \\
&= \frac{(|F|+1)(|F|-1)^6}{|F|^7}.
\end{aligned}$$

Similarly, we also have $1 - P_e(t_2) = (|F|+1)(|F|-1)^6 / |F|^7$.

Next, we consider the failure probability $P_e$. Similar to the above analysis, we have

$$\begin{aligned}
1 - P_e &= \Pr(\{\mathrm{Rank}(F_{t_1}) = 2\} \cap \{\mathrm{Rank}(F_{t_2}) = 2\}) \\
&= \Pr(\{\det(F_{t_1}) \neq 0\} \cap \{\det(F_{t_2}) \neq 0\}) \\
&= \Pr(\{\det(K_s) \neq 0\} \cap \{\det(B_1) \neq 0\} \cap \{\det(B_2) \neq 0\}) \\
&= \Pr(\det(K_s) \neq 0) \cdot \Pr(\det(B_1) \neq 0) \cdot \Pr(\det(B_2) \neq 0) \\
&= \frac{(|F|+1)(|F|-1)^{10}}{|F|^{11}}.
\end{aligned}$$

Moreover, since $P_{av} = \frac{P_e(t_1) + P_e(t_2)}{2}$, we have

$$P_{av} = 1 - \frac{(|F|+1)(|F|-1)^6}{|F|^7}.$$

This completes the proof.

It is known that if $|F| \ge |T|$, there exists a linear network code $C$ such that all sink nodes can successfully decode $w = \min_{t \in T} C_t$ symbols generated by the source node $s$, where $C_t$ is the minimum cut capacity between $s$ and the sink node $t$. For the butterfly network, $|F| \ge 2$ is enough. However, when we consider the performance of random linear network coding, the result is different.

For $|F| = 2$, we have $1 - P_e = \frac{3}{2^{11}} \approx 0.0015$. In other words, this success probability is far too low for application. In the same way, for $|F| = 3$ and $|F| = 4$, the success probabilities are approximately 0.023 and 0.070, respectively. These success probabilities are still too low for application.

If $1 - P_e \ge 0.9$ is considered sufficient for application, we have to choose a very large base field $F$ with cardinality not less than 87. This is because

$$\frac{(|F|+1)(|F|-1)^{10}}{|F|^{11}} \ge 0.9 \iff |F| \ge 87.$$

B. Butterfly Network with Fail Channels

In this subsection, as in [4], we consider the failure probabilities of random linear network coding for the butterfly network with channel failure probability $p$ (i.e., each channel is deleted from the network with probability $p$). Generally speaking, channel failure is a low probability event, i.e., $0 \le p \ll \frac{1}{2}$. In fact, if $p = 0$, it is just the butterfly network without fail channels discussed in the above subsection.

Theorem 2: For random linear network coding for the butterfly network $G$ with channel failure probability $p$:
• the failure probability of the sink node $t_i$ $(i = 1, 2)$ is
$$\tilde{P}_e(t_i) = 1 - \frac{(|F|+1)(|F|-1)^6}{|F|^7} (1-p)^6;$$
• the failure probability of the butterfly network $G$ is
$$\tilde{P}_e = 1 - \frac{(|F|+1)(|F|-1)^{10}}{|F|^{11}} (1-p)^9;$$
• the average failure probability is
$$\tilde{P}_{av} = 1 - \frac{(|F|+1)(|F|-1)^6}{|F|^7} (1-p)^6.$$

Proof: First, we consider the failure probability $\tilde{P}_e(t_1)$. For each channel $e_i$, we say "channel $e_i$ is successful" if $e_i$ is not deleted from the network, and define $\delta_i$ as the event that "$e_i$ is successful". We define $\tilde{f}_i$ as the active global encoding kernel of $e_i$, where

$$\tilde{f}_i = \begin{cases} f_i, & e_i \text{ is successful}, \\ 0, & \text{otherwise}, \end{cases}$$

and $f_i = \sum_{j:\, \mathrm{head}(e_j) = \mathrm{tail}(e_i)} k_{j,i} \tilde{f}_j$. Then the decoding matrix of the sink node $t_1$ is $F_{t_1} = (\tilde{f}_3 \; \tilde{f}_8)$. Hence, $\tilde{P}_e(t_1) = \Pr(\mathrm{Rank}((\tilde{f}_3 \; \tilde{f}_8)) \neq 2)$.

Note that the event "$\mathrm{Rank}((\tilde{f}_3 \; \tilde{f}_8)) = 2$" is equivalent to the event "$\mathrm{Rank}((f_3 \; f_8)) = 2$, $\delta_3$, and $\delta_8$". Moreover, since $(f_3 \; f_8) = (k_{1,3} \tilde{f}_1 \;\; k_{7,8} \tilde{f}_7)$, we have $\mathrm{Rank}((f_3 \; f_8)) = 2$ if and only if $\mathrm{Rank}((\tilde{f}_1 \; \tilde{f}_7)) = 2$ and $k_{1,3} \neq 0$, $k_{7,8} \neq 0$. Similarly, the event "$\mathrm{Rank}((\tilde{f}_1 \; \tilde{f}_7)) = 2$" is equivalent to the event "$\mathrm{Rank}((f_1 \; f_7)) = 2$, $\delta_1$, and $\delta_7$". Note that $\tilde{f}_4 = 0$ or $f_4$, and $f_4 = k_{1,4} \tilde{f}_1$, so $\tilde{f}_4 = 0$ or $k_{1,4} f_1$. Since $f_7 = k_{4,7} \tilde{f}_4 + k_{5,7} \tilde{f}_5$, we have $\mathrm{Rank}((f_1 \; f_7)) = 2$ if and only if $\mathrm{Rank}((f_1 \; \tilde{f}_5)) = 2$ and $k_{5,7} \neq 0$. Continuing with the same analysis, the event "$\mathrm{Rank}((f_1 \; \tilde{f}_5)) = 2$" is equivalent to the event "$\mathrm{Rank}((f_1 \; f_5)) = 2$ and $\delta_5$", and the event "$\mathrm{Rank}((f_1 \; f_5)) = 2$" is equivalent to the event "$\mathrm{Rank}((f_1 \; f_2)) = 2$, $k_{2,5} \neq 0$, and $\delta_2$", since $f_5 = k_{2,5} \tilde{f}_2$. Therefore, the event "$\mathrm{Rank}((\tilde{f}_3 \; \tilde{f}_8)) = 2$" is equivalent to the event "$\mathrm{Rank}((f_1 \; f_2)) = 2$, $\delta_1$, $\delta_2$, $\delta_3$, $\delta_5$, $\delta_7$, $\delta_8$, and $k_{1,3}, k_{2,5}, k_{5,7}, k_{7,8} \neq 0$". Hence, we have

$$\begin{aligned}
1 - \tilde{P}_e(t_1) &= \Pr(\mathrm{Rank}((\tilde{f}_3 \; \tilde{f}_8)) = 2) \\
&= \Pr(\{\mathrm{Rank}((f_1 \; f_2)) = 2\} \cap \{\delta_1, \delta_2, \delta_3, \delta_5, \delta_7, \delta_8\} \cap \{k_{1,3}, k_{2,5}, k_{5,7}, k_{7,8} \neq 0\}) \\
&= \Pr(\mathrm{Rank}((f_1 \; f_2)) = 2) \cdot \Pr(\delta_1, \delta_2, \delta_3, \delta_5, \delta_7, \delta_8) \cdot \Pr(k_{1,3}, k_{2,5}, k_{5,7}, k_{7,8} \neq 0) \\
&= \frac{(|F|+1)(|F|-1)^6}{|F|^7} (1-p)^6.
\end{aligned}$$

In the same way, we can get

$$\tilde{P}_e(t_2) = \tilde{P}_e(t_1) = 1 - \frac{(|F|+1)(|F|-1)^6}{|F|^7} (1-p)^6.$$

For the failure probability $\tilde{P}_e$, with the same analysis as above, we have

$$\begin{aligned}
1 - \tilde{P}_e &= \Pr(\{\mathrm{Rank}(F_{t_1}) = 2\} \cap \{\mathrm{Rank}(F_{t_2}) = 2\}) \\
&= \Pr(\{\mathrm{Rank}((\tilde{f}_3 \; \tilde{f}_8)) = 2\} \cap \{\mathrm{Rank}((\tilde{f}_6 \; \tilde{f}_9)) = 2\}) \\
&= \Pr(\{\mathrm{Rank}((f_1 \; f_2)) = 2\} \cap \{\delta_1, \delta_2, \delta_3, \delta_4, \delta_5, \delta_6, \delta_7, \delta_8, \delta_9\} \\
&\qquad \cap \{k_{1,3}, k_{2,5}, k_{5,7}, k_{7,8}, k_{2,6}, k_{1,4}, k_{4,7}, k_{7,9} \neq 0\}) \\
&= \Pr(\mathrm{Rank}((f_1 \; f_2)) = 2) \cdot \Pr(\delta_1, \delta_2, \delta_3, \delta_4, \delta_5, \delta_6, \delta_7, \delta_8, \delta_9) \\
&\qquad \cdot \Pr(k_{1,3}, k_{2,5}, k_{5,7}, k_{7,8}, k_{2,6}, k_{1,4}, k_{4,7}, k_{7,9} \neq 0) \\
&= \frac{(|F|+1)(|F|-1)^{10}}{|F|^{11}} (1-p)^9.
\end{aligned}$$

Finally, the average failure probability $\tilde{P}_{av}$ is given by

$$\tilde{P}_{av} = \frac{\tilde{P}_e(t_1) + \tilde{P}_e(t_2)}{2} = 1 - \frac{(|F|+1)(|F|-1)^6}{|F|^7} (1-p)^6.$$

The proof is completed.

III. THE LIMIT BEHAVIORS OF THE FAILURE PROBABILITIES

In this section, we discuss the limit behaviors of the failure probabilities. From Theorem 2, we have

$$\lim_{|F| \to \infty} \tilde{P}_e(t_i) = \lim_{|F| \to \infty} \tilde{P}_{av} = 1 - (1-p)^6, \quad (i = 1, 2),$$

$$\lim_{|F| \to \infty} \tilde{P}_e = 1 - (1-p)^9.$$

In particular, if we consider random linear network coding for the butterfly network without fail channels, i.e., $p = 0$, we have (also from Theorem 1)

$$\lim_{|F| \to \infty} P_e(t_i) = \lim_{|F| \to \infty} P_e = \lim_{|F| \to \infty} P_{av} = 0, \quad (i = 1, 2).$$

That is, for a sufficiently large base field $F$, the failure probabilities can become arbitrarily small. In fact, this result holds for all single source multicast network coding [4].

It is easy to see from Theorem 1 that $P_e(t_i)$ and $P_{av}$ approach 0 at the rate $\frac{5}{|F|}$, and $P_e$ approaches 0 at the rate $\frac{9}{|F|}$. This follows from the expansion $(1+x)(1-x)^n = 1 - (n-1)x + O(x^2)$ with $x = 1/|F|$ and $n = 6$ or $n = 10$.

IV. CONCLUSION

The performance of random linear network coding is illustrated by its failure probabilities. In general, it is very difficult to calculate the failure probabilities of random linear network coding for a general communication network. In this letter, we determine the failure probabilities of random linear network coding for the well-known butterfly network and for the butterfly network with channel failure probability $p$.

REFERENCES

[1] R. Ahlswede, N. Cai, S.-Y. R. Li, and R. W. Yeung, "Network Information Flow," IEEE Transactions on Information Theory, vol. 46, no. 4, pp. 1204-1216, Jul. 2000.
[2] S.-Y. R. Li, R. W. Yeung, and N. Cai, "Linear Network Coding," IEEE Transactions on Information Theory, vol. 49, no. 2, pp. 371-381, Feb. 2003.
[3] R. Koetter and M. Médard, "An Algebraic Approach to Network Coding," IEEE/ACM Transactions on Networking, vol. 11, no. 5, pp. 782-795, Oct. 2003.
[4] T. Ho, R. Koetter, M. Médard, M. Effros, J. Shi, and D. Karger, "A Random Linear Network Coding Approach to Multicast," IEEE Transactions on Information Theory, vol. 52, no. 10, pp. 4413-4430, Oct. 2006.
[5] H. Balli, X. Yan, and Z. Zhang, "On Randomized Linear Network Codes and Their Error Correction Capabilities," IEEE Transactions on Information Theory, vol. 55, no. 7, pp. 3148-3160, Jul. 2009.
[6] R. W. Yeung, "Information Theory and Network Coding," Springer, 2008.
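
A numerical check of the closed forms in Theorems 1 and 2 may help the reader. The following is a minimal sketch, not part of the original letter: the function names (`success_sink`, `success_network`, `min_field_size`, `simulate`) are hypothetical, and the Monte Carlo part assumes that $|F| = q$ is prime so that the integers modulo $q$ can stand in for the field $F$. It evaluates the success probabilities $1 - \tilde{P}_e(t_i)$ and $1 - \tilde{P}_e$ exactly, searches for the smallest integer $q$ meeting a target, and simulates the butterfly network channel by channel using the active global encoding kernels from the proof of Theorem 2.

```python
import random
from fractions import Fraction


def success_sink(q, p=0):
    """1 - P_e(t_i): closed form of Theorem 2 (Theorem 1 when p = 0)."""
    return Fraction((q + 1) * (q - 1) ** 6, q ** 7) * (1 - Fraction(p)) ** 6


def success_network(q, p=0):
    """1 - P_e: both sinks decode (Theorem 2; Theorem 1 when p = 0)."""
    return Fraction((q + 1) * (q - 1) ** 10, q ** 11) * (1 - Fraction(p)) ** 9


def min_field_size(target):
    """Smallest integer q >= 2 with success_network(q) >= target (p = 0)."""
    q = 2
    while success_network(q) < target:
        q += 1
    return q


def simulate(q, p, trials, seed=0):
    """Monte Carlo estimate of (sink t1 success, network success).

    Assumption: q is prime, so arithmetic mod q is arithmetic in F.
    """
    rng = random.Random(seed)

    def coeff():  # one uniformly random local encoding coefficient
        return rng.randrange(q)

    def scale(c, v):
        return (c * v[0] % q, c * v[1] % q)

    def add(u, v):
        return ((u[0] + v[0]) % q, (u[1] + v[1]) % q)

    def send(v):  # active kernel: zero vector if the channel is deleted
        return v if rng.random() >= p else (0, 0)

    def full_rank(u, v):  # 2x2 determinant test over F
        return (u[0] * v[1] - u[1] * v[0]) % q != 0

    ok_t1 = ok_both = 0
    for _ in range(trials):
        f1 = send((coeff(), coeff()))
        f2 = send((coeff(), coeff()))
        f3 = send(scale(coeff(), f1))
        f4 = send(scale(coeff(), f1))
        f5 = send(scale(coeff(), f2))
        f6 = send(scale(coeff(), f2))
        f7 = send(add(scale(coeff(), f4), scale(coeff(), f5)))
        f8 = send(scale(coeff(), f7))
        f9 = send(scale(coeff(), f7))
        t1 = full_rank(f3, f8)  # decoding matrix of t1
        t2 = full_rank(f6, f9)  # decoding matrix of t2
        ok_t1 += t1
        ok_both += t1 and t2
    return ok_t1 / trials, ok_both / trials


if __name__ == "__main__":
    for q in (2, 3, 4):
        print(q, float(success_network(q)))
    print("smallest q with success >= 0.9:", min_field_size(Fraction(9, 10)))
    print("simulated:", simulate(5, 0.1, 20000, seed=1))
    print("formula:  ", float(success_sink(5, 0.1)), float(success_network(5, 0.1)))
```

The integer search reproduces the threshold 87 stated in Section II-A. Since the order of a finite field must be a prime power and neither 87 nor 88 is one, the smallest actual field meeting the 0.9 target would have order 89. The simulation is only a sanity check with sampling error; it does not replace the proofs.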

The list of books you might like

Mind Management, Not Time Management

The 5 Second Rule: Transform your Life, Work, and Confidence with Everyday Courage

Shatter Me Complete Collection (Shatter Me; Destroy Me; Unravel Me; Fracture Me; Ignite Me)

As Good as Dead

C. Indice de autores

Opportunities in fashion careers

On the Re-erection of the Tribe Stenoscelideini SCHAEFER (Heteroptera, Coreidae, Coreinae)

Zur Synonymie westpaläarktischer Miriden (Heteroptera)

Gonycentrum heissi nov.sp. (Hemiptera, Heteroptera, Tingidae) from Madagascar

Heissocteus ernsti nov.gen. et nov.sp. (Heteroptera, Cydnidae) from Zambia

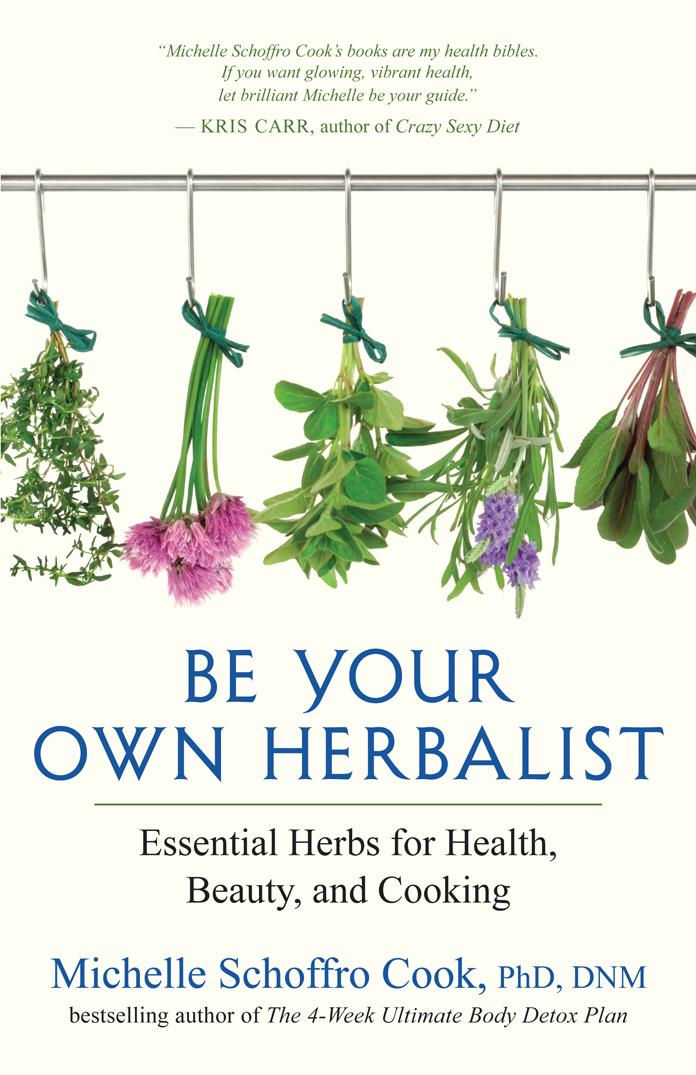

Be Your Own Herbalist

Ballad of the Lost Hare by Margaret Sidney

Contractivity of positive and trace preserving maps under $L_p$ norms

CALIFORNIA STATE UNIVERSITY, NORTHRIDGE DEVELOPING MINDFULNESS SKILLS OF MFT ...

CALIFORNIA STATE UNIVERSITY NORTHRIDGE Are College-Educated Minorities Also ...

CALIFORNIA STATE UNIVERSITY NORTHRIDGE MICROWAVE RECEIVER AMPLIFIER AND ...

California State Board of Pharmacy

b.a.ll.b.,b.b.a.ll.b._ll.b. syllabus_12.032018

Tomaszewski - Healing Patterns Of The Hebrew

Caracterización del sector de la aceituna de mesa en Andalucía

DTIC ADA465183: Comparing Java and .NET Security: Lessons Learned and Missed

Psycho Vox