Stochastic Approximation and Optimization of Random Systems


DMV Seminar, Volume 17

Lennart Ljung, Georg Pflug, Harro Walk

Stochastic Approximation and Optimization of Random Systems

Springer Basel AG

Authors' addresses:
L. Ljung, Linköping University, Department of Electrical Engineering, S-581 83 Linköping, Sweden
H. Walk, Universität Stuttgart, Mathematisches Institut A, Pfaffenwaldring 57, D-7000 Stuttgart 80, Germany
G. Pflug, Universität Wien, Institut für Statistik und Informatik, Universitätsstrasse 5, A-1010 Wien, Austria

Deutsche Bibliothek Cataloging-in-Publication Data: Stochastic approximation and optimization of random systems / Lennart Ljung; Georg Pflug; Harro Walk. Basel; Boston; Berlin: Birkhäuser, 1992 (DMV-Seminar; Vol. 17). ISBN 978-3-7643-2733-0; ISBN 978-3-0348-8609-3 (eBook); DOI 10.1007/978-3-0348-8609-3. NE: Ljung, Lennart; Pflug, Georg; Walk, Harro; Deutsche Mathematiker-Vereinigung: DMV-Seminar

This work is subject to copyright. All rights are reserved, whether the whole or part of the material is concerned, specifically those of translation, reprinting, re-use of illustrations, broadcasting, reproduction by photocopying machine or similar means, and storage in data banks. Under § 54 of the German Copyright Law, where copies are made for other than private use, a fee is payable to »Verwertungsgesellschaft Wort«, Munich.

© 1992 Springer Basel AG. Originally published by Birkhäuser Verlag Basel in 1992. Printed on acid-free paper, directly from the authors' camera-ready manuscripts.

Preface

The DMV seminar "Stochastische Approximation und Optimierung zufälliger Systeme" (Stochastic Approximation and Optimization of Random Systems) was held at Blaubeuren from 28 May to 4 June 1989. Its goal was to present the theory and application of stochastic approximation with a view to optimization problems, especially in engineering systems. These notes are based on the seminar lectures and consist of three parts:
I. Foundations of stochastic approximation (H. Walk);
II. Applicational aspects of stochastic approximation (G. Pflug);
III. Applications to adaptation algorithms (L. Ljung).
The prerequisites for reading this book are a basic knowledge of probability, mathematical statistics and optimization.

We would like to thank Prof. M. Barner and Prof. G. Fischer for organizing the seminar. We also thank the participants for their cooperation, and our assistants and secretaries for typing the manuscript.

November 1991, L. Ljung, G. Pflug, H. Walk

Table of contents

I Foundations of stochastic approximation (H. Walk)
§1 Almost sure convergence of stochastic approximation procedures
§2 Recursive methods for linear problems
§3 Stochastic optimization under stochastic constraints
§4 A learning model; recursive density estimation
§5 Invariance principles in stochastic approximation
§6 On the theory of large deviations
References for Part I

II Applicational aspects of stochastic approximation (G. Pflug)
§7 Markovian stochastic optimization and stochastic approximation procedures
§8 Asymptotic distributions
§9 Stopping times
§10 Applications of stochastic approximation methods
References for Part II

III Applications to adaptation algorithms (L. Ljung)
§11 Adaptation and tracking
§12 Algorithm development
§13 Asymptotic properties in the decreasing gain case
§14 Estimation of the tracking ability of the algorithms
References for Part III

I Foundations of stochastic approximation

Harro Walk
University of Stuttgart, Mathematisches Institut A, Pfaffenwaldring 57, D-7000 Stuttgart 80, Federal Republic of Germany

Stochastic approximation, or stochastic iteration, concerns the recursive estimation of quantities from noise-contaminated observations. Historical starting points are the papers of Robbins and Monro (1951) and of Kiefer and Wolfowitz (1952) on the recursive estimation of zeros and extremal points, respectively, of regression functions, i.e. of functions whose values can only be observed with errors of zero expectation.
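To make the Robbins-Monro idea concrete, here is a minimal sketch for an increasing regression function: each noisy observation is fed back with a damped gain $c/n$, so the iterate drifts toward the zero. The regression function $M(x) = 2(x-3)$, the Gaussian errors, $c = 1$, and the names `robbins_monro` and `observe` are hypothetical choices for illustration only.

```python
import random

def robbins_monro(observe, x1, n_steps, c=1.0):
    """Robbins-Monro iteration X_{n+1} = X_n - (c/n) * Y_n, where Y_n is a noisy
    observation (zero-mean error) of an increasing regression function at X_n."""
    x = x1
    for n in range(1, n_steps + 1):
        y = observe(x)           # noisy observation at the current estimate
        x = x - (c / n) * y      # correction damped by the gain c/n
    return x

# Hypothetical regression function M(x) = 2 (x - 3) with its zero at x = 3,
# observed with standard Gaussian errors of zero expectation.
rng = random.Random(0)
def observe(x):
    return 2.0 * (x - 3.0) + rng.gauss(0.0, 1.0)

estimate = robbins_monro(observe, x1=0.0, n_steps=20000)
print(f"estimated zero: {estimate:.3f}")
```

For a decreasing regression function the sign of the correction would be reversed.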
The Kiefer-Wolfowitz method is a stochastic gradient algorithm and may be described by the following example. A mixture of metals with variable mixing ratio is smelted at a fixed temperature. The hardness of the alloy depends on the mixing ratio, characterized by some $x \in \mathbb{R}^k$, but is subject to random fluctuations; let $F(x)$ be the expected hardness. The goal is the recursive estimation of a maximal point of $F$. One starts with a mixing ratio characterized by $X_1 \in \mathbb{R}^k$. Let $X_n$ characterize the mixing ratio in the $n$th step; for the neighbouring points $X_n \pm c_n e_l$ ($l = 1, \dots, k$), with $0 < c_n \to 0$ and the unit vector $e_l$ (having 1 as $l$-th coordinate), one obtains the random hardnesses $Y'_{n,l}$ and $Y''_{n,l}$, respectively. The mixing ratio in the next step is chosen as

$$X_{n+1} = X_n + \frac{c}{n}\left(\frac{Y'_{n,l} - Y''_{n,l}}{2c_n}\right)_{l=1,\dots,k}$$

with $c > 0$. If $F$ is totally differentiable with (Fréchet) derivative $DF$, the recursion can formally be written as

$$X_{n+1} = X_n + \frac{c}{n}\bigl(DF(X_n) - H_n - V_n\bigr)$$

with random vectors $X_n$, $H_n$, $V_n$, where $-H_n$ can be considered as the systematic error incurred by using divided differences instead of differential quotients, and $-V_n$ as a stochastic error with conditional expectation zero given $X_1, \dots, X_n$. Under certain assumptions on $F$ and the errors, $(X_n)$ converges almost surely (a.s.) to a maximal point of $F$. For the recursive estimation of a minimal point one uses the above formula with $-c/n$ instead of $c/n$.

The recursion is inspired by the gradient method in deterministic optimization. In contrast to most deterministic iterative procedures, the correction terms in stochastic approximation procedures, which are influenced by random effects, are provided with damping factors $c/n$ or, more generally, with deterministic or random weights (gains) $a_n$, usually with $0 < a_n \to 0$ and $\sum a_n = \infty$.

§1 Almost sure convergence of stochastic approximation procedures

Results on almost sure convergence of stochastic approximation processes are often proved by separating deterministic (pathwise) and stochastic considerations.
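The Kiefer-Wolfowitz recursion for a maximal point can be sketched in Python as follows. The hardness function with maximizer $(0.3, 0.6)$, the noise level, the start $X_1 = 0$, $c = 1$, and the widths $c_n = n^{-1/4}$ are hypothetical choices; $c_n$ is picked so that $c_n \to 0$ while $\sum a_n c_n < \infty$ and $\sum (a_n/c_n)^2 < \infty$ for $a_n = c/n$, conditions commonly imposed in Kiefer-Wolfowitz convergence results. The function names are illustrative.

```python
import random

def kiefer_wolfowitz(measure, x1, n_steps, c=1.0):
    """Search a maximal point of F(x) = E[measure(x)] via
    X_{n+1} = X_n + (c/n) * ((Y'_{n,l} - Y''_{n,l}) / (2 c_n))_{l=1..k}."""
    x = list(x1)
    k = len(x)
    for n in range(1, n_steps + 1):
        c_n = n ** -0.25                      # assumed widths: 0 < c_n -> 0
        divided_diffs = []
        for l in range(k):
            x_plus, x_minus = x.copy(), x.copy()
            x_plus[l] += c_n                  # X_n + c_n e_l
            x_minus[l] -= c_n                 # X_n - c_n e_l
            y_plus, y_minus = measure(x_plus), measure(x_minus)
            divided_diffs.append((y_plus - y_minus) / (2.0 * c_n))
        # Gain c/n; replace by -c/n to search a minimal point instead.
        x = [xi + (c / n) * d for xi, d in zip(x, divided_diffs)]
    return x

# Hypothetical expected hardness F(x) = 10 - |x - (0.3, 0.6)|^2,
# observed with zero-mean Gaussian fluctuations.
rng = random.Random(1)
def hardness(x):
    f = 10.0 - (x[0] - 0.3) ** 2 - (x[1] - 0.6) ** 2
    return f + rng.gauss(0.0, 0.1)

x_hat = kiefer_wolfowitz(hardness, x1=[0.0, 0.0], n_steps=5000)
print("estimated maximal point:", [round(v, 3) for v in x_hat])
```

For this quadratic $F$ the central differences carry no systematic error ($H_n = 0$); for a general $F$ the bias $-H_n$ shrinks only as $c_n \to 0$.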
The basic idea is to show that a "distance" between the estimate and the solution tends to become smaller. The so-called first Lyapunov method does not use knowledge of a solution. Thus, in deterministic numerical analysis, gradient and Newton procedures for minimizing or maximizing $F$ by a recursive sequence $(X_n)$ are investigated by a Taylor expansion of $F(X_{n+1})$ around $X_n$, a device first used in stochastic approximation by Blum (1954) and later by Kushner (1972) and Nevel'son and Has'minskii (1973/76, pp. 102-106). The second Lyapunov method, in its deterministic and stochastic versions, employs knowledge of a solution; the stochastic considerations often use "almost supermartingales" (Lemma 1.10). The following Lemma 1.1 (compare Henze 1966, Pakes 1982) concerns linear recursions and weighted means. Theorems 1.2, 1.7 and 1.8 below are purely deterministic and can immediately be formulated in a stochastic a.s. version. In view of applications to the estimation of functions as elements of a real Hilbert space $\mathbb{H}$ or a real Banach space $\mathbb{B}$, the domain of $F$ is often chosen as $\mathbb{H}$ or $\mathbb{B}$.

1.1. Lemma. Let $a_n \in [0,1)$ ($n \in \mathbb{N}$), $\beta_n := [(1-a_n)\cdots(1-a_1)]^{-1}$, $\gamma_n := a_n\beta_n$. Then
a) $\beta_n = 1 + \gamma_1 + \dots + \gamma_n$,
b) $\sum a_n = \infty \iff \beta_n \uparrow \infty$,
c) for elements $X_n$, $W_n$ of a linear space the representations
$$X_1 = 0, \qquad X_{n+1} = (1-a_n)X_n + a_nW_n \quad (n \in \mathbb{N})$$
and
$$X_{n+1} = \beta_n^{-1}\sum_{k=1}^{n}\gamma_kW_k \quad (n \in \mathbb{N})$$
are equivalent.
In the special case $a_n = 1/(n+1)$ one has $\beta_n = n+1$, $\gamma_n = 1$.

1.2. Theorem. Let $F : \mathbb{H} \to \mathbb{R}$ be bounded from below and have a Fréchet derivative $DF$. Assume $X_n, W_n, H_n, V_n \in \mathbb{H}$ ($n \in \mathbb{N}$) with
$$X_{n+1} = X_n - a_n\bigl(DF(X_n) - W_n\bigr), \qquad W_n = H_n + V_n,$$
where $a_n \in [0,1)$, $a_n \to 0$ ($n \to \infty$), $\sum a_n = \infty$. Let $\beta_n$, $\gamma_n$ be defined according to Lemma 1.1.

a) Assume
(1) $DF \in \mathrm{Lip}$, i.e. $\exists K \in \mathbb{R}_+\ \forall x', x'' \in \mathbb{H}:\ \|DF(x') - DF(x'')\| \le K\|x' - x''\|$,
(2') $\sum a_n\|H_n\|^2 < \infty$,
(2'') $\beta_n^{-1}\sum_{k=1}^{n}\gamma_kV_k \to 0$,
(2''') $\sum a_{n+1}\bigl\|\beta_n^{-1}\sum_{k=1}^{n}\gamma_kV_k\bigr\|^2 < \infty$.
Then $(F(X_n))$ is convergent, $\sum a_n\|DF(X_n)\|^2 < \infty$, and $DF(X_n) \to 0$ ($n \to \infty$).

b) Assume
(3) $DF$ uniformly continuous,
(4) $\lim_{\|x\|\to\infty}\|DF(x)\| > 0$ or $\exists \lim_{\|x\|\to\infty} F(x) \in \mathbb{R}$,
(5) $\{F(x);\ x \in \mathbb{H} \text{ with } DF(x) = 0\}$ nowhere dense in $\mathbb{R}$,
(6) $\forall R > 0$: $\{F(x);\ x \in \mathbb{H} \text{ with } \|x\| \le R \text{ and } \|DF(x)\| \le \delta\} \downarrow \{F(x);\ x \in \mathbb{H} \text{ with } \|x\| \le R \text{ and } DF(x) = 0\}$ ($\delta \to 0$),
(7) $\beta_n^{-1}\sum_{k=1}^{n}\gamma_kW_k \to 0$.
Then $(F(X_n))$ is convergent and $DF(X_n) \to 0$ ($n \to \infty$).

PROOF OF THEOREM 1.2a: One uses the first Lyapunov method. Motivated by partial summation, with the notation of Lemma 1.1 one has $a_nV_n = Z_n + a_n\tilde V_n$ with
$$\tilde V_n = \beta_{n-1}^{-1}\sum_{k=1}^{n-1}\gamma_kV_k \to 0 \ \text{according to (2'')}, \qquad Z_n = \tilde V_{n+1} - \tilde V_n$$
(by Lemma 1.1c, with $\beta_0 := 1$ and $\tilde V_1 = 0$, one has $\tilde V_{n+1} = (1-a_n)\tilde V_n + a_nV_n$). Thus
$$X_{n+1} = X_n - a_nDF(X_n) + Z_n + a_n\tilde V_n + a_nH_n.$$
Setting $X'_1 := X_1$, $X'_{n+1} := X'_n - a_nDF(X_n) + a_n\tilde V_n + a_nH_n$, one obtains
$$X'_{n+1} - X_{n+1} = -(Z_1 + \dots + Z_n) = -\tilde V_{n+1} \to 0,$$
$$X'_{n+1} = X'_n - a_nDF(X'_n) + a_nh_n \quad\text{with}\quad h_n = DF(X'_n) - DF(X_n) + \tilde V_n + H_n,$$
where $\sum a_n\|h_n\|^2 < \infty$ because of (1), (2''') and (2'). Therefore, and because of the Lipschitz condition on $DF$ and (2'''), it suffices to prove the assertions for the special case $V_n = 0$. From the recursion formula one obtains
$$F(X_{n+1}) - F(X_n) = -a_n\|DF(X_n)\|^2 + a_n\langle DF(X_n), H_n\rangle + a_n\int_0^1\bigl\langle DF\bigl(X_n + ta_n[-DF(X_n) + H_n]\bigr) - DF(X_n),\ -DF(X_n) + H_n\bigr\rangle\,dt$$
and, by the Lipschitz condition on $DF$,
$$F(X_{n+1}) \le F(X_n) - \tfrac12 a_n\|DF(X_n)\|^2 + a_n\|H_n\|^2$$
for sufficiently large $n$. Now (2') yields convergence of $(F(X_n))$. Further, because $F$ is bounded from below, one obtains convergence of $\sum a_n\|DF(X_n)\|^2$.

For $DF(X_n) \to 0$ an indirect proof is given. Assume there exists an $\varepsilon > 0$ with $\|DF(X_n)\| \ge \varepsilon$ for infinitely many $n$. Then, because of (2') and the foregoing result, there exists an $N$ with $\|DF(X_N)\| \ge \varepsilon$ for which the tail sums $\sum_{k=N}^{\infty} a_k\|DF(X_k)\|^2$ and $\sum_{k=N}^{\infty} a_k\|H_k\|^2$ lie below suitable bounds depending on $\varepsilon$. By induction it will be shown that $\|DF(X_k)\| \ge \varepsilon/2$ for all $k \ge N$; because of $\sum a_n = \infty$, this contradicts the foregoing result. Let $\|DF(X_k)\| \ge \varepsilon/2$ for $k = N, \dots, n$.
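Lemma 1.1 can be checked numerically with exact rational arithmetic. The sketch below assumes that the recursive representation in c) is $X_1 = 0$, $X_{n+1} = (1-a_n)X_n + a_nW_n$ (the form that the proof of Theorem 1.2 applies to $\tilde V_n$); the helper names `beta_gamma`, `recursive_mean` and `weighted_mean` are hypothetical. It computes $\beta_n$ and $\gamma_n$ and compares the recursion with the weighted mean $\beta_n^{-1}\sum_{k \le n}\gamma_kW_k$.

```python
from fractions import Fraction

def beta_gamma(a):
    """beta_n = [(1-a_n)...(1-a_1)]^{-1} and gamma_n = a_n * beta_n (Lemma 1.1)."""
    betas, gammas = [], []
    prod = Fraction(1)
    for a_n in a:
        prod *= (1 - a_n)          # (1-a_n)...(1-a_1)
        beta_n = 1 / prod
        betas.append(beta_n)
        gammas.append(a_n * beta_n)
    return betas, gammas

def recursive_mean(a, w):
    """X_1 = 0, X_{n+1} = (1 - a_n) X_n + a_n W_n; returns [X_2, ..., X_{N+1}]."""
    x, out = Fraction(0), []
    for a_n, w_n in zip(a, w):
        x = (1 - a_n) * x + a_n * w_n
        out.append(x)
    return out

def weighted_mean(a, w):
    """X_{n+1} = beta_n^{-1} * sum_{k<=n} gamma_k W_k; returns [X_2, ..., X_{N+1}]."""
    betas, gammas = beta_gamma(a)
    out, s = [], Fraction(0)
    for beta_n, gamma_n, w_n in zip(betas, gammas, w):
        s += gamma_n * w_n
        out.append(s / beta_n)
    return out

# Special case a_n = 1/(n+1): beta_n should be n+1 and gamma_n should be 1.
a_special = [Fraction(1, n + 1) for n in range(1, 11)]
betas, gammas = beta_gamma(a_special)
print("beta_1..beta_3:", betas[:3], "gamma_1..gamma_3:", gammas[:3])
```

Using `Fraction` instead of floats makes the equality checks exact. The identity behind a) and c) is $\beta_n(1-a_n) = \beta_{n-1}$ with $\beta_0 = 1$.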
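The recursion formula yields
$$X_{n+1} = X_N - \sum_{k=N}^{n} a_kDF(X_k) + \sum_{k=N}^{n} a_kH_k,$$
thus
$$\|X_{n+1} - X_N\| \le \sum_{k=N}^{n} a_k\|DF(X_k)\| + \sum_{k=N}^{n} a_k\|H_k\|\,\chi_{[\|H_k\| \le \varepsilon/2]} + \sum_{k=N}^{n} a_k\|H_k\|\,\chi_{[\|H_k\| > \varepsilon/2]}$$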
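As a numerical illustration of Theorem 1.2a (not a proof), the following minimal sketch runs $X_{n+1} = X_n - a_n(DF(X_n) - W_n)$, $W_n = H_n + V_n$, under choices made only for illustration: $F(x) = \tfrac12\|x - m\|^2$ on $\mathbb{R}^2$ with a hypothetical $m = (1, -2)$ (so $DF$ is Lipschitz with $K = 1$), $a_n = 1/(n+1)$, a systematic error $H_n = 0.5\,n^{-1/2}(1, 1)$ satisfying (2'), and i.i.d. standard Gaussian $V_n$. With $a_n = 1/(n+1)$, Lemma 1.1 turns $\beta_n^{-1}\sum_{k \le n}\gamma_kV_k$ into the arithmetic mean of $V_1, \dots, V_n$, so (2'') holds almost surely by the strong law of large numbers. The names `noisy_gradient_method`, `grad_F` and `H` are hypothetical.

```python
import math
import random

def noisy_gradient_method(grad, x1, n_steps, H, rng, noise_sd=1.0):
    """X_{n+1} = X_n - a_n (DF(X_n) - W_n) with W_n = H_n + V_n and a_n = 1/(n+1)."""
    x = list(x1)
    for n in range(1, n_steps + 1):
        a_n = 1.0 / (n + 1)
        h_n = H(n)                                    # systematic error H_n
        v_n = [rng.gauss(0.0, noise_sd) for _ in x]   # zero-mean stochastic error V_n
        g = grad(x)
        x = [xi - a_n * (gi - hi - vi) for xi, gi, hi, vi in zip(x, g, h_n, v_n)]
    return x

m = (1.0, -2.0)  # hypothetical minimizer of F(x) = 0.5 * ||x - m||^2

def grad_F(x):
    return [xi - mi for xi, mi in zip(x, m)]   # DF is Lipschitz with K = 1

def H(n):
    return [0.5 / math.sqrt(n)] * 2            # gives sum a_n ||H_n||^2 < infinity

rng = random.Random(42)
x_final = noisy_gradient_method(grad_F, [5.0, 5.0], 20000, H, rng)
grad_norm = math.hypot(*grad_F(x_final))
print(f"||DF(X_n)|| after 20000 steps: {grad_norm:.4f}")
```

The printed gradient norm should be small, in line with $DF(X_n) \to 0$. Convergence is slow (of order $n^{-1/2}$ here) because the errors are only averaged out through the gains.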

Description:The list of books you might like

A Thousand Boy Kisses

As Good as Dead

Credence

The 48 Laws of Power

ABB. Руководство пользователя преобразователей частоты типа ACS 140

ESAPRO 3D PIPING

Sanskritik Binimay Aru Sanhati

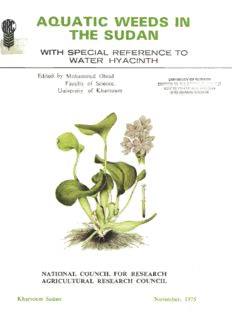

aquatic weeds in the sudan

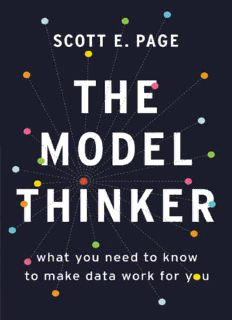

The Model Thinker: What You Need to Know to Make Data Work for You

Éléments essentiels du programme d'adaptation scolaire destiné aux élèves aveugles ou ayant des déficiences visuelles

Greek Government Gazette: Part 4, 2006 no. 114

NATYACHANVAR

Greek Government Gazette: Part 3, 2006 no. 365

Scientific American July 3 1880

Piers Ploughman II

Non-classicality of molecular vibrations activating electronic dynamics at room temperature

Morphometric variation in two populations of the cactus mouse (Peromyscus eremicus) from Trans-Pecos Texas

Bakke: Defining the Strict Scrutiny Test for Affirmative Action Policies Aimed at Achieving Diversity

Greek Government Gazette: Part 2, 2006 no. 1946

Weapon injury report

The American Revolution and The Boer War by Sydney G Fisher

Greek Government Gazette: Part 2, 2006 no. 1749