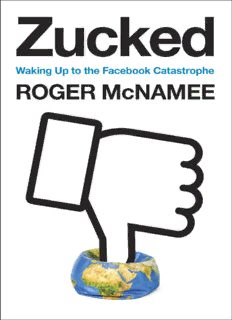

Zucked: Waking Up to the Facebook Catastrophe


Also by Roger McNamee
The New Normal
The Moonalice Legend: Posters and Words, Volumes 1–9

PENGUIN PRESS
An imprint of Penguin Random House LLC
penguinrandomhouse.com

Copyright © 2019 by Roger McNamee

Penguin supports copyright. Copyright fuels creativity, encourages diverse voices, promotes free speech, and creates a vibrant culture. Thank you for buying an authorized edition of this book and for complying with copyright laws by not reproducing, scanning, or distributing any part of it in any form without permission. You are supporting writers and allowing Penguin to continue to publish books for every reader.

“The Current Moment in History,” remarks by George Soros delivered at the World Economic Forum meeting, Davos, Switzerland, January 25, 2018. Reprinted by permission of George Soros.

LIBRARY OF CONGRESS CATALOGING-IN-PUBLICATION DATA
Names: McNamee, Roger, author.
Title: Zucked : waking up to the facebook catastrophe / Roger McNamee.
Description: New York : Penguin Press, 2019. | Includes bibliographical references and index.
Identifiers: LCCN 2018048578 (print) | LCCN 2018051479 (ebook) | ISBN 9780525561361 (ebook) | ISBN 9780525561354 (hardcover) | ISBN 9781984877895 (export)
Subjects: LCSH: Facebook (Electronic resource)—Social aspects. | Online social networks—Political aspects—United States. | Disinformation—United States. | Propaganda—Technological innovations. | Zuckerberg, Mark, 1984– —Influence. | United States—Politics and government.
Classification: LCC HM743.F33 (ebook) | LCC HM743.F33 M347 2019 (print) | DDC 302.30285—dc23
LC record available at https://lccn.loc.gov/2018048578

While the author has made every effort to provide accurate telephone numbers, internet addresses, and other contact information at the time of publication, neither the publisher nor the author assumes any responsibility for errors or for changes that occur after publication.
Further, the publisher does not have any control over and does not assume any responsibility for author or third-party websites or their content.

To Ann, who inspires me every day

Technology is neither good nor bad; nor is it neutral.
—Melvin Kranzberg’s First Law of Technology

We cannot solve our problems with the same thinking we used when we created them.
—Albert Einstein

Ultimately, what the tech industry really cares about is ushering in the future, but it conflates technological progress with societal progress.
—Jenna Wortham

CONTENTS
Also by Roger McNamee
Title Page
Copyright
Dedication
Epigraph
Prologue
1 The Strangest Meeting Ever
2 Silicon Valley Before Facebook
3 Move Fast and Break Things
4 The Children of Fogg
5 Mr. Harris and Mr. McNamee Go to Washington
6 Congress Gets Serious
7 The Facebook Way
8 Facebook Digs in Its Heels
9 The Pollster
10 Cambridge Analytica Changes Everything
11 Days of Reckoning
12 Success?
13 The Future of Society
14 The Future of You
Epilogue
Acknowledgments
Appendix 1: Memo to Zuck and Sheryl: Draft Op-Ed for Recode
Appendix 2: George Soros’s Davos Remarks: “The Current Moment in History”
Bibliographic Essay
Index
About the Author

Prologue

Technology is a useful servant but a dangerous master.
—CHRISTIAN LOUS LANGE

November 9, 2016

“The Russians used Facebook to tip the election!”

So began my side of a conversation the day after the presidential election. I was speaking with Dan Rose, the head of media partnerships at Facebook. If Rose was taken aback by how furious I was, he hid it well.

Let me back up. I am a longtime tech investor and evangelist. Tech had been my career and my passion, but by 2016, I was backing away from full-time professional investing and contemplating retirement. I had been an early advisor to Facebook founder Mark Zuckerberg—Zuck, to many colleagues and friends—and an early investor in Facebook. I had been a true believer for a decade. Even at this writing, I still own shares in Facebook.
In terms of my own narrow self-interest, I had no reason to bite Facebook’s hand. It would never have occurred to me to be an anti-Facebook activist. I was more like Jimmy Stewart in Hitchcock’s Rear Window. He is minding his own business, checking out the view from his living room, when he sees what looks like a crime in progress, and then he has to ask himself what he should do. In my case, I had spent a career trying to draw smart conclusions from incomplete information, and one day early in 2016 I started to see things happening on Facebook that did not look right. I started pulling on that thread and uncovered a catastrophe. In the beginning, I assumed that Facebook was a victim and I just wanted to warn my friends. What I learned in the months that followed shocked and disappointed me. I learned that my trust in Facebook had been misplaced.

This book is the story of why I became convinced, in spite of myself, that even though Facebook provided a compelling experience for most of its users, it was terrible for America and needed to change or be changed, and what I have tried to do about it. My hope is that the narrative of my own conversion experience will help others understand the threat. Along the way, I will share what I know about the technology that enables internet platforms like Facebook to manipulate attention. I will explain how bad actors exploit the design of Facebook and other platforms to harm and even kill innocent people. How democracy has been undermined because of design choices and business decisions by internet platforms that deny responsibility for the consequences of their actions. How the culture of these companies causes employees to be indifferent to the negative side effects of their success. At this writing, there is nothing to prevent more of the same.

This is a story about trust.
Technology platforms, including Facebook and Google, are the beneficiaries of trust and goodwill accumulated over fifty years by earlier generations of technology companies. They have taken advantage of our trust, using sophisticated techniques to prey on the weakest aspects of human psychology, to gather and exploit private data, and to craft business models that do not protect users from harm. Users must now learn to be skeptical about products they love, to change their online behavior, insist that platforms accept responsibility for the impact of their choices, and push policy makers to regulate the platforms to protect the public interest.

This is a story about privilege. It reveals how hypersuccessful people can be so focused on their own goals that they forget that others also have rights and privileges. How it is possible for otherwise brilliant people to lose sight of the fact that their users are entitled to self-determination. How success can breed overconfidence to the point of resistance to constructive feedback from friends, much less criticism. How some of the hardest working, most productive people on earth can be so blind to the consequences of their actions that they are willing to put democracy at risk to protect their privilege.

This is also a story about power. It describes how even the best of ideas, in the hands of people with good intentions, can still go terribly wrong. Imagine a stew of unregulated capitalism, addictive technology, and authoritarian values, combined with Silicon Valley’s relentlessness and hubris, unleashed on billions of unsuspecting users. I think the day will come, sooner than I could have imagined just two years ago, when the world will recognize that the value users receive from the Facebook-dominated social media/attention economy revolution masked an unmitigated disaster for our democracy, for public health, for personal privacy, and for the economy. It did not have to be that way.
It will take a concerted effort to fix it.

When historians finish with this corner of history, I suspect that they will cut Facebook some slack about the poor choices that Zuck, Sheryl Sandberg, and their team made as the company grew. I do. Making mistakes is part of life, and growing a startup to global scale is immensely challenging. Where I fault Facebook—and where I believe history will, as well—is for the company’s response to criticism and evidence. They had an opportunity to be the hero in their own story by taking responsibility for their choices and the catastrophic outcomes those choices produced. Instead, Zuck and Sheryl chose another path.

This story is still unfolding. I have written this book now to serve as a warning. My goals are to make readers aware of a crisis, help them understand how and why it happened, and suggest a path forward. If I achieve only one thing, I hope it will be to make the reader appreciate that he or she has a role to play in the solution. I hope every reader will embrace the opportunity.

It is possible that the worst damage from Facebook and the other internet platforms is behind us, but that is not where the smart money will place its bet. The most likely case is that the technology and business model of Facebook and others will continue to undermine democracy, public health, privacy, and innovation until a countervailing power, in the form of government intervention or user protest, forces change.

—

TEN DAYS BEFORE the November 2016 election, I had reached out formally to Mark Zuckerberg and Facebook chief operating officer Sheryl Sandberg, two people I considered friends, to share my fear that bad actors were exploiting Facebook’s architecture and business model to inflict harm on innocent people, and that the company was not living up to its potential as a force for good in society.
In a two-page memo, I had cited a number of instances of harm, none actually committed by Facebook employees but all enabled by the company’s algorithms, advertising model, automation, culture, and value system. I also cited examples of harm to employees and users that resulted from the company’s culture and priorities. I have included the memo in the appendix.

Zuck created Facebook to bring the world together. What I did not know when I met him but would eventually discover was that his idealism was unbuffered by realism or empathy. He seems to have assumed that everyone would view and use Facebook the way he did, not imagining how easily the platform could be exploited to cause harm. He did not believe in data privacy and did everything he could to maximize disclosure and sharing. He operated the company as if every problem could be solved with more or better code. He embraced invasive surveillance, careless sharing of private data, and behavior modification in pursuit of unprecedented scale and influence. Surveillance, the sharing of user data, and behavioral modification are the foundation of Facebook’s success. Users are fuel for Facebook’s growth and, in some cases, the victims of it.

When I reached out to Zuck and Sheryl, all I had was a hypothesis that bad actors were using Facebook to cause harm. I suspected that the examples I saw reflected systemic flaws in the platform’s design and the company’s culture. I did not emphasize the threat to the presidential election, because at that time I
