White Noise Distribution Theory

Hui-Hsiung Kuo

CRC Press

Boca Raton New York London Tokyo

Library of Congress Cataloging-in-Publication Data

Catalog record is available from the Library of Congress

This book contains information obtained from authentic and highly regarded sources. Reprinted material

is quoted with permission, and sources are indicated. A wide variety of references are listed. Reasonable

efforts have been made to publish reliable data and information, but the author and the publisher cannot

assume responsibility for the validity of all materials or for the consequences of their use.

Neither this book nor any part may be reproduced or transmitted in any form or by any means,

electronic or mechanical, including photocopying, microfilming, and recording, or by any information

storage or retrieval system, without prior permission in writing from the publisher.

The consent of CRC Press does not extend to copying for general distribution, for promotion, for

creating new works, or for resale. Specific permission must be obtained in writing from CRC Press for

such copying.

Direct all inquiries to CRC Press, Inc., 2000 Corporate Blvd., N.W., Boca Raton, Florida 33431.

© 1996 by CRC Press, Inc.

No claim to original U.S. Government works

International Standard Book Number 0-8493-8077-4

Printed in the United States of America 1 2 3 4 5 6 7 8 9 0

Printed on acid-free paper

Preface

White noise is generally regarded as the time derivative of a Brownian

motion. It does not exist in the ordinary sense since almost all Brownian

sample paths are nowhere differentiable. Informally it can be regarded as a

stochastic process which is independent at different times and is identically

distributed with zero mean and infinite variance. Due to this distinct feature,

white noise is often used as an idealization of a random noise which

is independent at different times and has large fluctuation. This leads to

integrals with respect to white noise. Such integrals cannot be defined

as Riemann-Stieltjes integrals. In order to overcome this difficulty, K. Ito

developed stochastic integration with respect to Brownian motion in 1944.

The Ito theory of stochastic integration turns out to be one of the most

fruitful branches of mathematics with far-reaching applications.

In the Ito theory of stochastic integration, the integrand needs to be

nonanticipating. It is natural to ask whether it is possible to extend the

stochastic integral wherein the integrand can be anticipating. In the 1970s

such integrals were defined by M. Hitsuda, K. Ito, and A. V. Skorokhod.

Since then there has been a growing interest in studying such integrals and

anticipating stochastic integral equations. There are several approaches to

define these integrals; for instance, the enlargement of filtrations, Malliavin

calculus, and white noise analysis. An intuitive approach is to define such

an integral directly with white noise as part of the integrand. This would

require the building of a rigorous mathematical theory of white noise.

Motivated by P. Lévy’s work on functional analysis, T. Hida introduced

the theory of white noise in 1975. The idea is to realize nonlinear functions

on a Hilbert space as functions of white noise. There are several advantages

for this realization. First of all, white noise can be thought of as a coordinate

system since it is an analogue of independent identically distributed random

variables. This enables us to generalize finite dimensional results in an

intuitive manner. Secondly, we can use the Wiener-Ito theorem to study

functions of white noise. This allows us to define generalized functions of

white noise in a very natural way. Thirdly, since Brownian motion is an

integral of white noise, the use of white noise provides an intrinsic method

to define stochastic integrals without the nonanticipating assumption.

During the last two decades the theory of white noise has evolved into an

infinite dimensional distribution theory. Its space $(\mathcal{E})$ of test functions is

the infinite dimensional analogue of the Schwartz space $\mathcal{S}(\mathbb{R}^r)$ on the finite

dimensional space $\mathbb{R}^r$. The dual space $(\mathcal{E})^*$ of $(\mathcal{E})$ is the infinite dimensional

analogue of the space $\mathcal{S}'(\mathbb{R}^r)$ of tempered distributions on $\mathbb{R}^r$. It contains

generalized functions such as the white noise $\dot{B}(t)$ for each fixed $t$. Thus

$\dot{B}(t)$ is a rigorous mathematical object and can be used for stochastic integration.

White noise distribution theory has now been applied to stochastic

integration, stochastic partial differential equations, stochastic variational

equations, infinite dimensional harmonic analysis, Dirichlet forms, quantum

field theory, Feynman integrals, infinite dimensional rotation groups,

and quantum probability.

There have been two books on white noise distribution theory:

1. T. Hida, H.-H. Kuo, J. Potthoff, and L. Streit: White Noise: An Infinite Dimensional Calculus, Kluwer Academic Publishers, 1993.

2. N. Obata: White Noise Calculus and Fock Space, Lecture Notes in Math., Vol. 1577, Springer-Verlag, 1994.

The space $(\mathcal{E})^*$ of generalized functions used in these books is somewhat

limited. By the Potthoff-Streit characterization theorem, the S-transform

$S\Phi$ of a generalized function $\Phi$ in $(\mathcal{E})^*$ must satisfy the growth condition

$$|S\Phi(\xi)| \le K \exp\big[a|\xi|_p^2\big].$$

Thus the function $F(\xi) = \exp\big[\langle\xi,\xi\rangle^2\big]$ is not the S-transform of a generalized

function in $(\mathcal{E})^*$. However this function is within the scope of Lévy’s

functional analysis. In fact, $F(\xi) = \exp\big[\langle\xi,\xi\rangle^2\big]$ is the S-transform of a

solution of the heat equation associated with the operator $(\Delta_G^*)^2$. Here $\Delta_G^*$

is the adjoint of the Gross Laplacian $\Delta_G$. This shows that there is a real

need to find a larger space of generalized functions than $(\mathcal{E})^*$.

Recently, Yu. G. Kondratiev and L. Streit have introduced an increasing

family of spaces $(\mathcal{E})_\beta^*$, $0 \le \beta < 1$, of generalized functions with $(\mathcal{E})_0^* = (\mathcal{E})^*$.

In the characterization theorem for $\Phi$ in $(\mathcal{E})_\beta^*$, the growth condition is

$$|S\Phi(\xi)| \le K \exp\big[a|\xi|_p^{2/(1-\beta)}\big].$$

For example, the above function $F(\xi) = \exp\big[\langle\xi,\xi\rangle^2\big]$ is the S-transform of

a generalized function in $(\mathcal{E})_{1/2}^*$.
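
The index $\beta = 1/2$ for $F(\xi) = \exp\big[\langle\xi,\xi\rangle^2\big]$ can be checked against the two growth conditions, $K\exp\big[a|\xi|_p^2\big]$ and $K\exp\big[a|\xi|_p^{2/(1-\beta)}\big]$, by a short computation. The following is only a sketch. It assumes the norms are normalized so that $|\langle\xi,\xi\rangle| \le |\xi|_p^2$, which the preface does not state, and it checks the growth bound alone, not the analyticity that a characterization theorem would also require.

```latex
% Assumption (not stated in the preface): |\langle\xi,\xi\rangle| \le |\xi|_p^2.
|F(\xi)| \;=\; \big|\exp\big[\langle\xi,\xi\rangle^{2}\big]\big|
        \;\le\; \exp\big[\,|\xi|_p^{4}\,\big].
% For real \xi the exponent grows like the fourth power along rays s\xi, s \to \infty,
% so no bound of the form K\exp[a|\xi|_p^{2}] can hold.
% Matching the exponent 4 with 2/(1-\beta):
\frac{2}{1-\beta} = 4 \quad\Longleftrightarrow\quad \beta = \tfrac{1}{2}.
```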

There are three objectives for writing this book. The first one is to

carry out white noise distribution theory for the space $(\mathcal{E})_\beta^*$ of generalized

functions and the space $(\mathcal{E})_\beta$ of test functions for each $0 \le \beta < 1$. As

pointed out above, the space $(\mathcal{E})^*$ is not large enough for the study of

the heat equation associated with the operator $(\Delta_G^*)^2$. In general,

$F(\xi) = \exp\big[t\langle\xi,\xi\rangle^k\big]$ is the S-transform of a solution of the heat equation associated

with the operator $(\Delta_G^*)^k$. The solution is a generalized function in the space

$(\mathcal{E})_{(k-1)/k}^*$. There are other motivations for the spaces $(\mathcal{E})_\beta^*$, $0 < \beta < 1$; for

instance, a generalization of Donsker’s delta function in Example 7.7 and

the white noise integral equation in Example 13.46. An important example

of generalized functions in the space $(\mathcal{E})_\beta^*$ is given by the grey noise measure

$\nu_\lambda$ (see Example 8.5) which has the characteristic function

$$\int_{\mathcal{E}'} e^{i\langle x,\xi\rangle}\,d\nu_\lambda(x) = L_\lambda\big(|\xi|_0^2\big),$$

where $L_\lambda$, $0 < \lambda \le 1$, is the Mittag-Leffler function given by

$$L_\lambda(t) = \sum_{n=0}^{\infty} \frac{(-t)^n}{\Gamma(1+\lambda n)}.$$

Here $\Gamma$ is the Gamma function. The grey noise measure induces a generalized

function in the space $(\mathcal{E})_{1-\lambda}^*$.
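
The Mittag-Leffler series $L_\lambda(t) = \sum_{n \ge 0} (-t)^n / \Gamma(1+\lambda n)$ is easy to evaluate numerically for moderate $t \ge 0$. The following is a minimal sketch, not taken from the book: the function name `mittag_leffler`, the tolerance, and the term limit are all illustrative assumptions. Plain summation of the alternating series loses accuracy for large $t$, so the sketch is meant for small arguments only.

```python
import math


def mittag_leffler(lam: float, t: float, tol: float = 1e-16, max_terms: int = 1000) -> float:
    """Partial sum of L_lam(t) = sum_{n>=0} (-t)^n / Gamma(1 + lam*n), for t >= 0.

    Hypothetical helper for illustration; reliable only for moderate t,
    because the alternating series suffers from cancellation when t is large.
    """
    if not 0.0 < lam <= 1.0:
        raise ValueError("lam must lie in (0, 1]")
    if t < 0.0:
        raise ValueError("t must be nonnegative")
    if t == 0.0:
        return 1.0  # only the n = 0 term survives
    log_t = math.log(t)
    total = 0.0
    for n in range(max_terms):
        # Work with logarithms so that Gamma(1 + lam*n) never overflows.
        magnitude = math.exp(n * log_t - math.lgamma(1.0 + lam * n))
        total += magnitude if n % 2 == 0 else -magnitude
        # log(magnitude) is concave in n and equals 0 at n = 0, so once a
        # term drops below tol all later terms are smaller still.
        if magnitude < tol:
            break
    return total


if __name__ == "__main__":
    # lam = 1 reduces to the exponential series: L_1(t) = exp(-t).
    print(mittag_leffler(1.0, 2.0), math.exp(-2.0))
    # lam = 1/2 has the closed form exp(t^2) * erfc(t).
    print(mittag_leffler(0.5, 1.0), math.e * math.erfc(1.0))
```

The two printed pairs compare the series with the closed forms $L_1(t) = e^{-t}$ and $L_{1/2}(t) = e^{t^2}\operatorname{erfc}(t)$, which are standard identities for the Mittag-Leffler function.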

The second objective is to discuss some of the recent progress on Fourier

transform, Laplacian operators, and white noise integration. The Fourier-Gauss

transforms acting on the space $(\mathcal{E})_\beta$ of test functions are studied in

detail. The probabilistic interpretation of the Lévy Laplacian is discussed.

The Hitsuda-Skorokhod integral $\int \partial_s^*\varphi(s)\,ds$ is defined as a random variable,

not as a generalized function. The S-transform is used to solve some

anticipating stochastic integral equations. For instance, consider the anticipating

stochastic integral equation in Example 13.30

$$X(t) = \operatorname{sgn}(B(1)) + \int_0^t \partial_s^* X(s)\,ds,$$

where the anticipation comes from the initial condition. The S-transform

can be used to derive the solution

$$X(t) = \operatorname{sgn}(B(1) - t)\, e^{B(t) - \frac{1}{2}t}.$$

Note that $X(t)$ is not continuous in $t$. Consider another interesting anticipating

stochastic integral equation (see Example 13.35)

$$X(t) = 1 + \int_0^t \partial_s^*\big(B(1)X(s)\big)\,ds,$$

where the anticipation comes from the integrand. We prove an anticipating

Ito’s formula in Theorem 13.21 which can be used to find the solution

$$X(t) = \exp\Big[B(1)\int_0^t e^{-(t-s)}\,dB(s) - \tfrac{1}{4}B(1)^2\big(1 - e^{-2t}\big) - t\Big].$$

On the other hand, we define white noise integrals as Pettis or Bochner

integrals taking values in the space $(\mathcal{E})_\beta^*$ of generalized functions. For example,

Donsker’s delta function can be represented as a white noise integral

(see Example 13.9). This representation is applied to Feynman integrals in

Chapter 14. An existence and uniqueness theorem for white noise integral

equations is given in Theorem 13.43.

The third objective is to give an easy presentation of white noise distribution

theory. We have provided motivations and numerous examples.

More importantly, many new ideas and techniques are introduced. For example,

the Wick tensor $:x^{\otimes n}:$ is usually defined by induction. However,

being motivated by Hermite polynomials with a parameter, we find it much

easier to define $:x^{\otimes n}:$ directly by

$$:x^{\otimes n}: \;=\; \sum_{k=0}^{[n/2]} \binom{n}{2k}(2k-1)!!\,(-1)^k\, x^{\otimes(n-2k)}\,\widehat{\otimes}\,\tau^{\otimes k},$$

where $\tau$ is the trace operator. This definition immediately gives the relationship

between Wick tensors and Hermite polynomials with a parameter.
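
In one dimension the same pattern gives the Hermite polynomial with parameter $\sigma^2$, namely $:x^n:_{\sigma^2} = \sum_{k=0}^{[n/2]} \binom{n}{2k}(2k-1)!!\,(-\sigma^2)^k x^{n-2k}$, with ordinary powers in place of tensor powers and $\sigma^2$ in place of the trace. The sketch below compares this direct sum with the usual inductive definition $:x^{n+1}: = x\,:x^n: - n\sigma^2 :x^{n-1}:$. It is a scalar illustration only; the function names are hypothetical and nothing here is taken from the book's own text.

```python
from math import comb


def odd_double_factorial(k: int) -> int:
    """Return (2k-1)!! = 1 * 3 * ... * (2k-1), with the convention (-1)!! = 1."""
    result = 1
    for j in range(1, 2 * k, 2):
        result *= j
    return result


def hermite_direct(n: int, x: float, sigma2: float) -> float:
    """Hermite polynomial with parameter sigma2, computed from the direct sum."""
    return sum(
        comb(n, 2 * k) * odd_double_factorial(k) * (-sigma2) ** k * x ** (n - 2 * k)
        for k in range(n // 2 + 1)
    )


def hermite_recursive(n: int, x: float, sigma2: float) -> float:
    """Same polynomial from the induction H_{m+1} = x*H_m - m*sigma2*H_{m-1}."""
    prev, curr = 1.0, x  # H_0 and H_1
    if n == 0:
        return prev
    for m in range(1, n):
        prev, curr = curr, x * curr - m * sigma2 * prev
    return curr


if __name__ == "__main__":
    # n = 4 gives x^4 - 6*sigma2*x^2 + 3*sigma2^2 in both versions.
    for n in range(7):
        print(n, hermite_direct(n, 1.5, 0.8), hermite_recursive(n, 1.5, 0.8))
```

The direct sum needs no earlier polynomials, which is the practical advantage of a closed-form definition over an inductive one.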

The Kubo-Yokoi theorem says that every test function has a continuous version.

A beginner in white noise distribution theory usually finds the proof

of this theorem very hard to understand. We give a rather transparent

proof of this theorem by showing that a test function $\varphi$ can be represented

as in Equation (6.11) by

$$\varphi(x) = \langle\!\langle\, :e^{\langle\cdot,x\rangle}:,\ \Theta\varphi \,\rangle\!\rangle,$$

where the renormalization $:e^{\langle\cdot,x\rangle}:$ is a generalized function in $(\mathcal{E})^*$ for each

$x$ and $\Theta$ is a continuous linear operator from $(\mathcal{E})_\beta$ into itself for any $\beta$. In

fact, this representation also yields the analytic extension of $\varphi$ and produces

various norm estimates. We have also introduced new ideas and techniques

in the proof of the Kondratiev-Streit characterization theorem for generalized

functions in $(\mathcal{E})_\beta^*$.

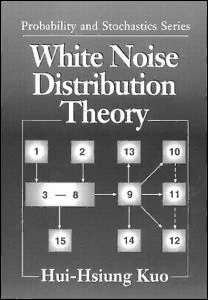

This book is accessible to anyone with a first year graduate course in real

analysis and some knowledge of Hilbert spaces. Prior familiarity with nuclear

spaces and Gel’fand triples is not required. The reader can easily pick

up the necessary background from Chapter 2. Anyone interested in learning

the mathematical theory of white noise can quickly do so from Chapters 3

to 9. Applications of white noise in integral kernel operators, Fourier transforms,

Laplacian operators, white noise integration, and Feynman integrals

are given in Chapters 10 to 14. Although there are some relationships

among them, these five chapters can be read independently after Chapter

9. The material in Chapter 15 can be read right after Chapter 8. We have

put it after Chapter 13 since white noise integral equations provide a natural

motivation for studying positive generalized functions. In Appendix A we

give bibliographical notes and comment on several open problems relating

to other fields. Appendix B contains a collection of miscellaneous formulas

which are needed for this book. Some of them are actually proved as lemmas

or derived in examples. They are put together for the convenience of

the reader.

Since this book is intended to be an introduction to white noise distribution

theory, we cannot cover every aspect of its applications. For example,

we have omitted topics such as quantum probability and Yang-Mills equations,

infinite dimensional rotation groups, stochastic partial differential

equations, stochastic variational equations, Dirichlet forms, and quantum

field theory. We only make a few comments on these topics in Appendix

A. On the other hand, we believe that the material in Chapters 3 to 9 will

provide adequate background for applications to many problems which use

white noise. In Appendix A we mention several open problems for further

research. It is our sincere hope that the reader will find white noise distribution

theory interesting. If the reader can apply this theory to other

fields, it will be our greatest pleasure.

This book is based on a series of lectures which I gave at the Daewoo

Workshop and at several universities in Korea (Seoul National University,

Sogang University, Sook Myung Women’s University, Korean Advanced

Institute of Science and Technology, Chonnam National University, and

Yonsei University) during the summer of 1994. Toward the end of my visit

Professor D. M. Chung suggested that I should write up my lecture notes,

which eventually became the manuscript for this book. Were it not for

his suggestion, this book would not have materialized. I certainly was not

thinking about writing a book before my visit to Korea.

My visit to Korea was arranged by Professor D. M. Chung with financial

support from Daewoo Foundation, Global Analysis Research Center at

Seoul National University, Sogang University, and Sook Myung Women’s

University. I would like to express my gratitude to him and these institutions.

I want to express my appreciation for the warm hospitality of the

following persons: K. S. Chang, S. J. Chang, D. P. Chi, S. M. Cho, B. D.

Choi, T. S. Chung, U. C. Ji, S. H. Kang, S. J. Kang, D. H. Kim, S. K. Kim,

K. Sim Lee, Y. M. Park, Y. S. Shim, I. S. Wee, and M. H. Woo.

I want to take this opportunity to thank each of the following persons and

their institutions for inviting me to give a series of lectures: B. D. Choi (Korean

Advanced Institute of Science and Technology), P. L. Chow (Wayne

State University), T. Hida (Meijo University and Nagoya University), C.

R. Hwang (Academia Sinica), Y.-J. Lee (Cheng Kung University), T. F.

Lin (Soochow University), Y. Okabe (Hokkaido University), R. Rebolledo

(Pontificia Universidad Católica de Chile), H. Sato (Kyushu University),

N. R. Shieh (Taiwan University), L. Streit (Universität Bielefeld), and Y.

Zhang (Fudan University). The lecture notes resulting from these visits

have influenced the writing of this book.