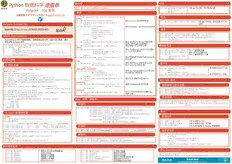

Python Data Science Cheat Sheet - Spark SQL Basics (PDF)


Python for Data Science Cheat Sheet
PySpark - SQL Basics

Original author: DataCamp (Learn Python for Data Science Interactively)
Translated by 呆鸟
天善智能 (Hellobi), a business intelligence and big data community: www.hellobi.com

PySpark and Spark SQL

Spark SQL is Apache Spark's module for working with structured data.

Initializing SparkSession

A SparkSession is used to create DataFrames, register DataFrames as tables, run SQL queries, cache tables, and read Parquet files.

>>> from pyspark.sql import SparkSession
>>> spark = SparkSession \
        .builder \
        .appName("Python Spark SQL basic example") \
        .config("spark.some.config.option", "some-value") \
        .getOrCreate()

Creating DataFrames

From RDDs

>>> from pyspark.sql import Row          # Row is not exported by pyspark.sql.types
>>> from pyspark.sql.types import *

Infer Schema

>>> sc = spark.sparkContext
>>> lines = sc.textFile("people.txt")
>>> parts = lines.map(lambda l: l.split(","))
>>> people = parts.map(lambda p: Row(name=p[0], age=int(p[1])))
>>> peopledf = spark.createDataFrame(people)

Specify Schema

>>> # age stays a string here so that it matches the StringType fields declared below
>>> people = parts.map(lambda p: Row(name=p[0], age=p[1].strip()))
>>> schemaString = "name age"
>>> fields = [StructField(field_name, StringType(), True)
              for field_name in schemaString.split()]
>>> schema = StructType(fields)
>>> spark.createDataFrame(people, schema).show()
+--------+---+
|    name|age|
+--------+---+
|    Mine| 28|
|   Filip| 29|
|Jonathan| 30|
+--------+---+

From Spark Data Sources

JSON

>>> df = spark.read.json("customer.json")
>>> df.show()
+--------------------+---+---------+--------+--------------------+
|             address|age|firstName|lastName|         phoneNumber|
+--------------------+---+---------+--------+--------------------+
|[New York,10021,N...| 25|     John|   Smith|[[212 555-1234,ho...|
|[New York,10021,N...| 21|     Jane|     Doe|[[322 888-1234,ho...|
+--------------------+---+---------+--------+--------------------+
>>> df2 = spark.read.load("people.json", format="json")

Parquet Files

>>> df3 = spark.read.load("users.parquet")

Text Files

>>> df4 = spark.read.text("people.txt")

Inspecting Data

>>> df.dtypes                  # Return the column names and data types of df
>>> df.show()                  # Display the contents of df
>>> df.head()                  # Return the first row (df.head(n) returns the first n rows)
>>> df.first()                 # Return the first row
>>> df.take(2)                 # Return the first 2 rows
>>> df.schema                  # Return the schema of df
>>> df.describe().show()       # Compute summary statistics
>>> df.columns                 # Return the column names of df
>>> df.count()                 # Return the number of rows in df
>>> df.distinct().count()      # Return the number of distinct rows in df
>>> df.printSchema()           # Print the schema of df
>>> df.explain()               # Print the logical and physical plans

Duplicate Values

>>> df = df.dropDuplicates()

Queries

>>> from pyspark.sql import functions as F

Select

>>> df.select("firstName").show()                 # Show all entries in the firstName column
>>> df.select("firstName", "lastName") \
      .show()
>>> df.select("firstName",                        # Show firstName, age, and contact type
              "age",                              # for every phoneNumber entry
              F.explode("phoneNumber") \
               .alias("contactInfo")) \
      .select("contactInfo.type",
              "firstName",
              "age") \
      .show()
>>> df.select(df["firstName"], df["age"] + 1) \   # Show firstName and age, with 1 added
      .show()                                     # to every age
>>> df.select(df["age"] > 24).show()              # Show TRUE/FALSE for whether age is greater than 24

When

>>> df.select("firstName",                        # Show firstName, and 1 if age is greater
              F.when(df.age > 30, 1) \            # than 30, otherwise 0
               .otherwise(0)) \
      .show()
>>> df[df.firstName.isin("Jane", "Boris")] \      # Return the rows whose firstName is one of
      .collect()                                  # the given values

Like

>>> df.select("firstName",                        # Show firstName, and TRUE if lastName matches
              df.lastName.like("Smith")) \        # the SQL LIKE pattern "Smith"
      .show()

Startswith - Endswith

>>> df.select("firstName",                        # Show firstName, and TRUE if lastName starts
              df.lastName \                       # with "Sm"
                .startswith("Sm")) \
      .show()
>>> df.select(df.lastName.endswith("th")) \       # Show TRUE if lastName ends with "th"
      .show()

Substring

>>> df.select(df.firstName.substr(1, 3) \         # Return substrings of firstName
                .alias("name")) \
      .collect()

Between

>>> df.select(df.age.between(22, 24)) \           # Show TRUE if age is between 22 and 24
      .show()                                     # (inclusive)

Adding, Updating, and Removing Columns

Adding Columns

>>> df = df.withColumn('city', df.address.city) \
           .withColumn('postalCode', df.address.postalCode) \
           .withColumn('state', df.address.state) \
           .withColumn('streetAddress', df.address.streetAddress) \
           .withColumn('telePhoneNumber', F.explode(df.phoneNumber.number)) \
           .withColumn('telePhoneType', F.explode(df.phoneNumber.type))

Updating Columns

>>> df = df.withColumnRenamed('telePhoneNumber', 'phoneNumber')

Removing Columns

>>> df = df.drop("address", "phoneNumber")
>>> df = df.drop(df.address).drop(df.phoneNumber)

GroupBy

>>> df.groupBy("age") \                           # Group by the age column and count the
      .count() \                                  # members of each group
      .show()

Filter

>>> df.filter(df["age"] > 24).show()              # Keep only the rows where age is greater than 24

Sort

>>> peopledf.sort(peopledf.age.desc()).collect()  # Sort by age, descending
>>> df.sort("age", ascending=False).collect()     # Sort by age, descending
>>> df.orderBy(["age", "city"], ascending=[0, 1]) \   # Sort by age descending, then city ascending
      .collect()

Missing and Replacing Values

>>> df.na.fill(50).show()                         # Replace null values with a given value
>>> df.na.drop().show()                           # Drop the rows of df that contain null values
>>> df.na \                                       # Replace one value with another
      .replace(10, 20) \
      .show()

Repartitioning

>>> df.repartition(10) \                          # Split df into 10 partitions
      .rdd \
      .getNumPartitions()
>>> df.coalesce(1).rdd.getNumPartitions()         # Merge df into 1 partition

Running SQL Queries

Registering DataFrames as Views

>>> peopledf.createGlobalTempView("people")
>>> df.createTempView("customer")
>>> df.createOrReplaceTempView("customer")

Querying Views

>>> df5 = spark.sql("SELECT * FROM customer")     # show() returns None, so keep the
>>> df5.show()                                    # DataFrame and display it separately
>>> peopledf2 = spark.sql("SELECT * FROM global_temp.people")
>>> peopledf2.show()

Output

Data Structures

>>> rdd1 = df.rdd                                 # Convert df to an RDD
>>> df.toJSON().first()                           # Convert df to an RDD of JSON strings and return the first
>>> df.toPandas()                                 # Return the contents of df as a Pandas DataFrame

Saving to Files

>>> df.select("firstName", "city") \
      .write \
      .save("nameAndCity.parquet")
>>> df.select("firstName", "age") \
      .write \
      .save("namesAndAges.json", format="json")

Stopping SparkSession

>>> spark.stop()