Proceedings of AMI-ARCS 2009


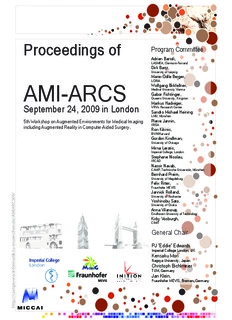

5th Workshop on Augmented Environments for Medical Imaging including Augmented Reality in Computer-Aided Surgery
September 24, 2009 in London

General Chair
PJ “Eddie” Edwards, Imperial College London, UK
Kensaku Mori, Nagoya University, Japan
Christoph Bichlmeier, TUM, Germany
Jan Klein, Fraunhofer MEVIS, Bremen, Germany

Preface

These proceedings cover the AMI/ARCS one-day satellite workshop of MICCAI 2009. This workshop continues the tradition of Augmented Reality in Computer Aided Surgery (ARCS) 2003 and the Workshops on Augmented environments for Medical Imaging and Computer-aided Surgery (AMI-ARCS) 2004, 2006 and 2008. The workshop is a forum for researchers involved in all aspects of augmented environments for medical imaging. Augmented environments aim to provide the physician with enhanced perception of the patient either by fusing various image modalities or by presenting medical image data directly on the physician’s view, establishing a direct relation between the image and the patient. The workshop was divided into four sessions: Applications; Efficiency in AR and Registration; Visualisation and Ultrasound; and Visualisation and Algorithms.

There were three invited speakers. Professor Henry Fuchs from UNC Chapel Hill is one of the pioneers of augmented reality research and provided a highly entertaining look at augmented reality research, both past and present. Professor Eduard Gröller of the Technical University Vienna gave a talk on multimodal image fusion for diagnosis of coronary artery disease. This reflects a growing interest amongst the AMI-ARCS community in multimodal image fusion, which was specifically highlighted as a theme this year. Finally, Dr. Hongen Liao of the University of Tokyo described his significant research experience in augmented reality under the heading of integral videography.

A very popular feature of AMI-ARCS 2009 was the inclusion of a demonstration session over lunch. This enabled researchers to experience five cutting-edge systems first hand. It is hoped that this will be a feature of future AMI-ARCS workshops. There was industrial involvement from Inition.co.uk, who provided 3D visualisation for the workshop. It is hoped that this relationship may continue. At the end of the meeting there was a panel discussion on the future of both AMI-ARCS itself and AR.

AMI/ARCS 2009 brought together clinicians and technical researchers from industry and academia with interests in computer science, electrical engineering, physics, and clinical medicine to present state-of-the-art developments in this ever-growing research area. There is significant ongoing interest in AMI/ARCS, with a steady number of participants and the continued high quality of submissions. We are also pleased with the trend in the research to provide fully functional demonstrations and to aim towards real clinical applications that will be of benefit to patients.

September 24th 2009
PJ “Eddie” Edwards, Christoph Bichlmeier, Jan Klein, Kensaku Mori
Program Chairs, AMI-ARCS 2009

Program Committee
Adrien Bartoli, LASMEA, Clermont-Ferrand
Dirk Bartz, University of Leipzig
Marie-Odile Berger, LORIA
Wolfgang Birkfellner, Medical University Vienna
Gabor Fichtinger, Queens University, Kingston
Markus Hadwiger, VRVis Research Center
Sandro-Michael Heining, LMU, München
Pierre Jannin, IRISA
Ron Kikinis, BWH/Harvard
Gordon Kindlman, University of Chicago
Mirna Lerotic, Imperial College, London
Stephane Nicolau, IRCAD
Nassir Navab, CAMP, Technische Universität, München
Bernhard Preim, University of Magdeburg
Felix Ritter, Fraunhofer MEVIS
Jannick Rolland, University of Rochester
Yoshinobu Sato, University of Osaka
Anna Vilanova, Eindhoven University of Technology
Kirby Vosburgh, CIMIT

Sponsoring Institutions
Imperial College, London, UK
TUM, Germany
Fraunhofer MEVIS, Bremen, Germany
Nagoya University, Japan
Inition.co.uk, London, UK

Table of Contents

Session 1 – Applications
AR-Assisted navigation: applications in anesthesia and cardiac interventions. John Moore, Cristian A. Linte, Chris Wedlake and Terry M. Peters
Development of Endoscopic Robot System with Augmented Reality Functions for NOTES that Enables Activation of Four Robotic Forceps. Naoki Suzuki, Asaki Hattori, Kazuo Tanoue, Satoshi Ieiri, Kozo Konishi, Hajime Kenmotsu and Makoto Hashizume
A Practical Approach for Intraoperative Contextual In-Situ Visualization. Christoph Bichlmeier, Maksym Kipot, Stuart Holdstock, Sandro-Michael Heining, Ekkehard Euler and Nassir Navab

Session 2 – Efficiency in AR and Registration
Interactive 3D auto-stereoscopic image guided surgical navigation system with GPU accelerated high-speed processing. Huy Hoang Tran, Hiromasa Yamashita, Ken Masamune, Takeyoshi Dohi and Hongen Liao
Fast non-rigid registration of medical image data for image guided surgery. S.K. Shah, R.S. Rowland, and R.J. Lapeer
Statistical deformation model driven atlas registration with stochastic sub-sampling. Wenzhe Shi, Lin Mei, Daniel Rückert and P.J. “Eddie” Edwards

Session 3 – Visualisation and Ultrasound
Intraoperative ultrasound probe calibration in a sterile environment. Ramtin Shams, Raúl San Jose Estepar, Vaibhav Patil, Kirby G. Vosburgh
Realtime Integral Videography Auto-stereoscopic Surgery Navigation System using Intra-operative 3D Ultrasound: System Design and In-vivo Feasibility Study. Nicholas Herlambang, Hiromasa Yamashita, Hongen Liao, Ken Masamune and Takeyoshi Dohi
A Movable Tomographic Display for 3D Medical Images. Gaurav Shukla, Bo Wang, John Galeotti, Roberta Klatzky, Bing Wu, Bert Unger, Damion Shelton, Brian Chapman and George Stetten

Session 4 – Visualisation and Algorithms
Patient-specific Texture Blending on Surfaces of Arbitrary Topology. Johannes Totz, Adrian J. Chung and Guang-Zhong Yang
Improving Tracking Accuracy Using Neural Networks in Augmented Reality Environments. Arun Kumar Raj Voruganti, Oliver Burgert and Dirk Bartz
Using Photo-consistency for Intra-operative Registration in Augmented Reality based Surgical Navigation. G. Gonzalez-Garcia and Rudy Lapeer
EKF Monocular SLAM 3D Modeling, Measuring and Augmented Reality from Endoscope Image Sequences. Oscar G. Grasa, J. Civera, A. Güemes, V. Muñoz, and J.M.M. Montiel

Demonstration Session – Summaries
Medical Contextual In-Situ Visualization. Christoph Bichlmeier, Maksym Kipot, Stuart Holdstock, Sandro-Michael Heining, Ekkehard Euler and Nassir Navab
Medical Augmented Reality using Autostereoscopic Image for Minimally Invasive Surgery. Hongen Liao
Miniaturised Augmented Reality System (MARS). PJ “Eddie” Edwards, Aakif Tanveer, Tim Carter, Dean Barratt and Dave Hawkes
ARView - Augmented Reality based Surgical Navigation system software for minimally invasive surgery. Rudy Lapeer and G. Gonzalez-Garcia
PerkStation - Percutaneous Surgery Training and Performance Measurement Platform. Paweena U-Thainual, Siddharth Vikal, John A. Carrino, Iulian Iordachita, Gregory S. Fischer, Gabor Fichtinger

Author Index

Session 1 – Applications

Overview of AR-Assisted Navigation: Applications in Anesthesia and Cardiac Therapy

John Moore1, Cristian A. Linte1,2, Chris Wedlake1, and Terry M. Peters1,2
Imaging Research Laboratories, Robarts Research Institute, Biomedical Engineering Graduate Program, University of Western Ontario, London ON Canada
{jmoore,tpeters}@imaging.robarts.ca

Abstract. The global focus on minimizing invasiveness associated with conventional treatment has inevitably led to restricted access to the target tissues. Complex procedures that previously allowed surgeons to directly see and access targets within the human body must now be “seen” and “reached” via medical imaging and specially designed tools, respectively. A successful transition to less invasive therapy is subject to the ability to provide physicians with sufficient navigation information that allows them to maintain therapy efficacy despite “synthetic” visualization and target manipulation. Here we describe a comprehensive system currently under development that integrates the principles of augmented reality-assisted navigation into clinical practice. To illustrate its direct benefits in the clinic, we describe the implementation of the system for an anesthetic delivery application, as well as for intracardiac therapy.

1 Introduction

In many engineering applications, computer generated displays are used to model mechanical designs, simulate interaction between different components, and virtually analyze a system’s behaviour prior to its implementation; however, these models are rarely carried further into the physical implementation of the system.
Similarly, in the medical world, pre-operative information is acquired in order to diagnose a patient’s condition, but it is often limited to the treatment plan rather than being integrated into the therapy delivery stage. Augmented reality (AR) technology permits the supply of additional information to the visual field of a user in order to facilitate, or in some cases enable task performance [1]. Using such environments, pre-operative anatomical models featuring the entire treatment plan can be “brought in” during therapy, facilitating treatment or providing physicians with otherwise unavailable information.

Here we build upon the typical AR concepts towards more complex environments. As opposed to using a direct view of the anatomy as “reality”, we employ ultrasound (US) imaging to obtain a real-time anatomical display, and further augment the echo images with pre-operative anatomical information and representations of surgical tools. The generated environment not only complements the real-time US display with pre-operatively planned information, but also allows the physician to immerse him/herself within a “mixed reality” environment accurately registered to the patient.

We have demonstrated the implementation of this technology for intracardiac guidance [2], and have already identified the potential that such AR-assisted US guidance environments may eliminate the need for intra-operative fluoroscopic imaging and allow the fusion of surgical planning and guidance [3,4]. In this paper we review the components of the AR-assisted platform and, in addition to the cardiac application, we also present its implementation for an anesthetic delivery application: facet joint injections and peripheral nerve block.
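
Augmenting an echo image with a representation of a tracked tool amounts to expressing the tool position in the coordinate frame of the US image. The following is a minimal sketch of that idea, not the platform's actual code. It assumes a tracking system that reports the poses of a tool sensor and a probe sensor, plus a probe calibration transform; the frame names, the helper functions `rigid`, `invert_rigid`, `apply` and `tool_tip_in_image`, and the numeric poses are all hypothetical.

```python
import math


def rigid(rz_deg, t):
    """4x4 rigid transform: rotation about z by rz_deg degrees, then translation t (mm)."""
    c, s = math.cos(math.radians(rz_deg)), math.sin(math.radians(rz_deg))
    return [[c, -s, 0.0, t[0]],
            [s, c, 0.0, t[1]],
            [0.0, 0.0, 1.0, t[2]],
            [0.0, 0.0, 0.0, 1.0]]


def invert_rigid(T):
    """Invert a rigid transform as [R^T | -R^T t], without a general matrix inverse."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    ti = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [ti[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def apply(T, p):
    """Apply a 4x4 transform to a 3D point."""
    return [sum(T[i][k] * p[k] for k in range(3)) + T[i][3] for i in range(3)]


def tool_tip_in_image(T_tracker_tool, T_tracker_probe, T_probe_image, tip_tool):
    """Map the tool tip from the tool sensor frame into the US image frame.

    Assumed conventions (hypothetical): T_a_b maps coordinates in frame b
    to frame a; T_probe_image comes from a prior probe calibration.
    """
    p_tracker = apply(T_tracker_tool, tip_tool)                # tool -> tracker
    p_probe = apply(invert_rigid(T_tracker_probe), p_tracker)  # tracker -> probe
    return apply(invert_rigid(T_probe_image), p_probe)         # probe -> image


# Illustrative poses in millimetres (made-up values, not measured data).
tip = tool_tip_in_image(rigid(0, [100, 50, 20]),
                        rigid(90, [80, 40, 20]),
                        rigid(0, [0, -10, 0]),
                        [0.0, 0.0, 0.0])
print(tip)
```

If the image frame is defined with its third axis perpendicular to the scan plane, the third coordinate of the result is the tool tip's distance from the US image, which a display could use to decide how to draw the tool.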

2 Surgical Platform Architecture And Applications

Our surgical guidance platform (Atamai Viewer - http://www.atamai.com) comprises a user interface based on Python and the Visualization Toolkit (VTK) and integrates a wide variety of components for IGS applications, including multi-modality image visualization, anatomical modeling, surgical tracking, and haptic control. The environment supports stereoscopic visualization of volumetric data via cine sequences synchronized with the intra-operative ECG [5], as well as fusion of multiple components with different translucency levels for overlays.

The origins of our augmented reality surgical platform arise from the desire of our surgery colleagues to develop a procedure that enables therapy delivery inside the beating heart. Not only does this attempt require a means of introducing multiple tools into the cardiac chambers, but also the ability to visualize and manipulate these devices in real time. Consequently, we adapted these original techniques for other applications, including electro-physiology mapping for atrial fibrillation therapy [3] and an AR system for port placement [6].

2.1 Surgical Guidance: Intracardiac Therapy Delivery

Our first and most challenging AR-assisted application is the planning and guidance of minimally invasive, off-pump mitral valve (MV) implantation and atrial septal defect (ASD) repair. Given the lack of visualization during off-pump surgery, we used this platform to build an AR environment to provide surgeons with a virtual display of the surgical field that resembles the real intracardiac environment to which they do not have direct visual access.

We proposed an intracardiac visualization approach that relied on intra-operative echocardiography for real-time imaging, augmented with representations of the surgical tools tracked in real-time and displayed within anatomical context available from pre-operative images.
By carefully integrating all components, such an environment is expected to provide reliable tool-to-target navigation, followed by accurate on-target positioning.

Using Imaging to “See” Ultrasound is widely employed as a standard interventional imaging modality and 2D TEE (trans-esophageal echocardiography)
