Owen Arden PDF

Preview Owen Arden

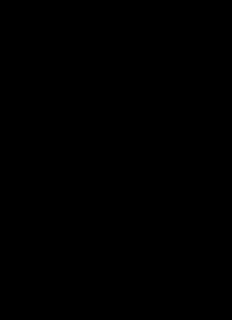

MIT Open Access Articles

Automatic partitioning of database applications

The MIT Faculty has made this article openly available.

Citation: Alvin Cheung, Samuel Madden, Owen Arden, and Andrew C. Myers. 2012. Automatic partitioning of database applications. Proc. VLDB Endow. 5, 11 (July 2012), 1471-1482.
As Published: http://dx.doi.org/10.14778/2350229.2350262
Publisher: Association for Computing Machinery (ACM)
Persistent URL: http://hdl.handle.net/1721.1/90386
Version: Author's final manuscript: final author's manuscript post peer review, without publisher's formatting or copy editing
Terms of use: Creative Commons Attribution-Noncommercial-Share Alike

Automatic Partitioning of Database Applications

Alvin Cheung∗, Owen Arden†, Samuel Madden, Andrew C. Myers
MIT CSAIL: {akcheung, madden}@csail.mit.edu
Department of Computer Science, Cornell University: {owen, andru}@cs.cornell.edu

∗Supported by an NSF Fellowship
†Supported by a DoD NDSEG Fellowship

ABSTRACT

Database-backed applications are nearly ubiquitous in our daily lives. Applications that make many small accesses to the database create two challenges for developers: increased latency and wasted resources from numerous network round trips. A well-known technique to improve transactional database application performance is to convert part of the application into stored procedures that are executed on the database server. Unfortunately, this conversion is often difficult. In this paper we describe Pyxis, a system that takes database-backed applications and automatically partitions their code into two pieces, one of which is executed on the application server and the other on the database server. Pyxis profiles the application and server loads, statically analyzes the code's dependencies, and produces a partitioning that minimizes the number of control transfers as well as the amount of data sent during each transfer. Our experiments using TPC-C and TPC-W show that Pyxis is able to generate partitions with up to 3× reduction in latency and 1.7× improvement in throughput when compared to a traditional non-partitioned implementation, and has comparable performance to that of a custom stored procedure implementation.

1. INTRODUCTION

Transactional database applications are extremely latency sensitive for two reasons. First, in many transactional applications (e.g., database-backed websites), there is typically a hard response time limit of a few hundred milliseconds, including the time to execute application logic, retrieve query results, and generate HTML. Saving even a few tens of milliseconds of latency per transaction can be important in meeting these latency bounds. Second, longer-latency transactions hold locks longer, which can severely limit maximum system throughput in highly concurrent systems.

Stored procedures are a widely used technique for improving the latency of database applications. The idea behind stored procedures is to rewrite sequences of application logic that are interleaved with database commands (e.g., SQL queries) into parameterized blocks of code that are stored on the database server. The application then sends commands to the database server, typically on a separate physical machine, to invoke these blocks of code.

Stored procedures can significantly reduce transaction latency by avoiding round trips between the application and database servers. These round trips would otherwise be necessary in order to execute the application logic found between successive database commands. The resulting speedup can be substantial. For example, consider a Java implementation of a TPC-C-like benchmark, which has relatively little application logic. Running each TPC-C transaction as a stored procedure can offer up to a 3× reduction in latency versus running each SQL command via a separate JDBC call. This reduction results in a 1.7× increase in overall transaction throughput on this benchmark.

However, stored procedures have several disadvantages:

• Portability and maintainability: Stored procedures break a straight-line application into two distinct and logically disjoint code bases. These code bases are usually written in different languages and must be maintained separately. Stored-procedure languages are often database-vendor specific, making applications that use them less portable between databases. Programmers are less likely to be familiar with or comfortable in low-level, even arcane, stored procedure languages like PL/SQL or TransactSQL. Tools for debugging and testing stored procedures are also less advanced than those for more widely used languages.

• Conversion effort: Identifying sections of application logic that are good candidates for conversion into stored procedures is tricky. In order to design effective stored procedures, programmers must identify sections of code that make multiple (or large) database accesses and can be parameterized by relatively small amounts of input. Weighing the relative merits of different designs requires programmers to model or measure how often a stored procedure is invoked and how much parameter data needs to be transferred. Both are nontrivial tasks.

• Dynamic server load: Running parts of the application as stored procedures is not always a good idea. If the database server is heavily loaded, pushing more computation into it by calling stored procedures will hurt rather than help performance. A database server's load tends to change over time, depending on the workload and the utilization of the applications it is supporting. It is therefore difficult for developers to predict the resources available on the servers hosting their applications. Even with accurate predictions, they have no easy way to adapt their programs' use of stored procedures to a dynamically changing server load.

We propose that these disadvantages of manually generated stored procedures can be avoided by automatically identifying and extracting application code to be shipped to the database server.
We implemented this new approach in Pyxis, a system that automatically partitions a database application into two pieces. One is deployed on the application server and the other in the database server as stored procedures. The two programs communicate with each other via remote procedure calls (RPCs) to implement the semantics of the original application. In order to generate a partition, Pyxis first analyzes application source code using static analysis. It then collects dynamic information such as the runtime profile and machine loads. The collected profile data and the results from the analysis are used to formulate a linear program. Its objective is to minimize, subject to a maximum CPU load, the overall latency due to network round trips between the application and database servers, as well as the amount of data sent during each round trip. The solved linear program then yields a fine-grained, statement-level partitioning of the application's source code. The partitioned code is split into two halves and executed on the application and database servers using the Pyxis runtime.

The main benefit of our approach is that the developer does not need to manually decide which part of her program should be executed where. Pyxis identifies good candidate code blocks for conversion to stored procedures and automatically produces the two distinct pieces of code from the single application code base. When the application is modified, Pyxis can automatically regenerate and redeploy this code. By periodically re-profiling their application, developers can generate new partitions as the load on the server or the application code changes. Furthermore, the system can switch between partitions as necessary by monitoring the current server load.

[Figure 1: Pyxis architecture. Components shown: application source, Instrumentor, Static Analyzer, Partitioner, PyxIL Compiler, Source Profiler, Load Profiler, and a Pyxis Runtime on each of the Application Server and the Database Server, connected by RPC.]
Pyxis makes several contributions:

1. We present a formulation for automatically partitioning programs into stored procedures that minimize overall latency subject to CPU resource constraints. Our formulation leverages a combination of static and dynamic program analysis to construct a linear optimization problem whose solution is our desired partitioning.
2. We develop an execution model for partitioned applications in which consistency of the distributed heap is maintained by automatically generating custom synchronization operations.
3. We implement a method for adapting to changes in real-time server load by dynamically switching between pre-generated partitions created using different resource constraints.
4. We evaluate our Pyxis implementation on two popular transaction processing benchmarks, TPC-C and TPC-W. We compare the performance of our partitions to the original program and to versions using manually created stored procedures.

Our results show that Pyxis can automatically partition database programs to get the best of both worlds. When CPU resources are plentiful, Pyxis produces a partition with performance comparable to hand-coded stored procedures. When resources are limited, it produces a partition comparable to simple client-side queries.

The rest of the paper is organized as follows. We start with an architectural overview of Pyxis in Sec. 2. We describe how Pyxis programs execute and synchronize data in Sec. 3. We present the optimization problem and describe how solutions are obtained in Sec. 4. Sec. 5 explains the generation of partitioned programs, and Sec. 6 describes the Pyxis runtime system. Sec. 7 shows our experimental results, followed by related work and conclusions in Sec. 8 and Sec. 9.

2. OVERVIEW

Figure 1 shows the architecture of the Pyxis system. Pyxis takes as input an application written in Java that uses JDBC to connect to the database, and performs several analyses and transformations on it.¹ The analysis used in Pyxis is general and does not impose any restrictions on the programming style of the application. The final result is two separate programs, one that runs on the application server and one that runs on the database server. These two programs communicate with each other as necessary to implement the original application's semantics. Execution starts at the partitioned program on the application server but periodically switches to its counterpart on the database server, and vice versa. We refer to these switches as control transfers. Each statement in the original program is assigned a placement in the partitioned program, on either the application server or the database server. Control transfers occur when a statement with one placement is followed by a statement with a different placement. Following a control transfer, the calling program blocks until the callee returns control. Hence, a single thread of control is maintained across the two servers.

Although the two partitioned programs execute in different address spaces, they share the same logical heap and execution stack. This program state is kept in sync by transferring heap and stack updates during each control transfer, or by fetching updates on demand. The execution stack is maintained by the Pyxis runtime. The program heap, however, is kept in sync by explicit heap synchronization operations, generated using a conservative static program analysis.

Static dependency analysis. The goal of partitioning is to preserve the original program semantics while achieving good performance. This is done by reducing the number of control transfers and the amount of data sent during transfers as much as possible. The first step is to perform an interprocedural static dependency analysis that determines the data and control dependencies between program statements. The data dependencies conservatively capture all data that may need to be sent if a dependent statement is assigned to a different partition. The control dependencies capture the necessary sequencing of program statements, allowing the Pyxis code generator to find the best program points for control transfers.

The results of the dependency analysis are encoded in a graph form that we call a partition graph. It is a program dependence graph (PDG)² augmented with extra edges representing additional information. A PDG-like representation is appealing because it combines both data and control dependencies into a single representation. Unlike in a PDG, each edge of a partition graph carries a weight. The weight models the cost of satisfying that edge's dependencies if the edge is partitioned so that its source and destination lie on different machines. The partition graph is novel; previous automatic partitioning approaches have partitioned control-flow graphs [34, 8] or dataflow graphs [23, 33]. Prior work in automatic parallelization [28] has also recognized the advantages of PDGs as a basis for program representation.

¹We chose Java due to its popularity in writing database applications. Our techniques can be applied to other languages as well.
²Since it is interprocedural, it actually is closer to a system dependence graph [14, 17] than a PDG.

Profile data collection. The static dependency analysis defines the structure of dependencies in the program. To determine the optimal partition, however, the system also needs to know how frequently each program statement is executed, because placing a "hot" code fragment on the database server may increase server load beyond its capacity. To get a more accurate picture of the runtime behavior of the program, statements in the partition graph are weighted by an estimated execution count. Additionally, each edge is weighted by an estimated latency cost that represents the communication overhead for data or control transfers. Both weights are captured by dynamic profiling of the application.

For applications that exhibit different operating modes, such as the browsing versus shopping mix in TPC-W, each mode could be profiled separately to generate partitions suitable for it. The Pyxis runtime includes a mechanism to dynamically switch between different partitionings based on current CPU load.

Optimization as integer programming. Using the results from the static analysis and dynamic profiling, the Pyxis partitioner formulates the placement of each program statement and each data field in the original program as an integer linear programming problem. These placements then drive the transformation of the input source into the intermediate language PyxIL (for PYXis Intermediate Language). The PyxIL program is very similar to the input Java program, except that each statement is annotated with its placement, :APP: or :DB:, denoting execution on the application or database server. Thus, PyxIL compactly represents a distributed program in a single unified representation. PyxIL code also includes explicit heap synchronization operations, which are needed to ensure the consistency of the distributed heap.

In general, the partitioner generates several different partitionings of the program using multiple server instruction budgets. Each budget specifies an upper limit on how much computation may be executed at the database server. Generating multiple partitions with different resource constraints enables automatic adaptation to different levels of server load.

Compilation from PyxIL to Java. For each partitioning, the PyxIL compiler translates the PyxIL source code into two Java programs, one for each runtime. These programs are compiled using the standard Java compiler and linked with the Pyxis runtime. The database partition program is run in an unmodified JVM colocated with the database server, and the application partition is similarly run on the application server. This is not exactly the same as running traditional stored procedures inside a DBMS. It is, however, similar to other stored-procedure implementations that provide a foreign language interface, such as PL/Java [1], and execute stored procedures in a JVM external to the DBMS. We find that running the program outside the DBMS does not significantly hurt performance as long as it is colocated. With more engineering effort, the database partition program could run in the same process as the DBMS.

Executing partitioned programs. The runtimes for the application server and the database server communicate over TCP sockets using a custom remote procedure call mechanism. The RPC interface includes operations for control transfer and state synchronization. The runtime also periodically measures the current CPU load on the database server to support dynamic, adaptive switching among different partitionings of the program. The runtime is described in more detail in Sec. 6.

3. RUNNING PYXIS PROGRAMS

Figure 2 shows a running example used to explain Pyxis throughout the paper. It is meant to resemble a fragment of the new-order transaction in TPC-C, modified to exhibit relevant features of Pyxis. The transaction retrieves the order that a customer has placed, computes its total cost, and deducts the total cost from the customer's account. It begins with a call to placeOrder on behalf of a given customer cid at a given discount dct. Then computeTotalCost extracts the costs of the items in the order using getCosts. It iterates through each of the costs to compute a total and to record the discounted cost. Finally, control returns to placeOrder, which updates the customer's account. The two operations insertNewLineItem and updateAccount update the database's contents, while getCosts retrieves data from the database. If there are N items in the order, the example code incurs N round trips to the database from the insertNewLineItem calls, plus two more from getCosts and updateAccount.

```java
 1 class Order {
 2   int id;
 3   double[] realCosts;
 4   double totalCost;
 5   Order(int id) {
 6     this.id = id;
 7   }
 8   void placeOrder(int cid, double dct) {
 9     totalCost = 0;
10     computeTotalCost(dct);
11     updateAccount(cid, totalCost);
12   }
13   void computeTotalCost(double dct) {
14     int i = 0;
15     double[] costs = getCosts();
16     realCosts = new double[costs.length];
17     for (double itemCost : costs) {
18       double realCost;
19       realCost = itemCost * dct;
20       totalCost += realCost;
21       realCosts[i++] = realCost;
22       insertNewLineItem(id, realCost);
23     }
24   }
25 }
```

Figure 2: Running example

There are multiple ways to partition the fields and statements of this program. An obvious partitioning is to assign all fields and statements to the application server. This would produce the same number of remote interactions as the standard JDBC-based implementation. At the other extreme, a partitioning might place all statements on the database server, in effect creating a stored procedure for the entire placeOrder method. As with a traditional stored procedure, each time placeOrder is called, the values cid and dct must be serialized and sent to the remote runtime. Other partitionings are possible. Placing only the loop in computeTotalCost on the database would save N round trips if no additional communication were necessary to satisfy data dependencies. In general, assigning more code to the database server can reduce latency, but it can also put additional load on the database. Pyxis aims to choose partitionings that achieve the smallest possible latency given the resources currently available on the server.

3.1 A PyxIL Partitioning

Figure 3 shows PyxIL code for one possible partitioning of our running example. PyxIL code makes explicit the placement of code and data, as well as the synchronization of updates to a distributed heap, but keeps the details of control and data transfers abstract. Field declarations and statements in PyxIL are annotated with a placement label (:APP: or :DB:). The placement of a statement indicates where the instruction is executed. For field declarations, the placement indicates where the authoritative value of the field resides. However, a copy of a field's value may be found on the remote server. Synchronization protocols based on a conservative program analysis ensure that this copy is up to date if it might be used before the next control transfer. Thus, each object apparent at the source level is represented by two objects, one at each server. We refer to these as the APP and DB parts of the object.

In the example code, the field id is assigned to the application server, as indicated by the :APP: placement label. The field totalCost, however, is assigned to the database, as indicated by :DB:. The array allocated on line 18 is placed on the application server. All statements are placed on the application server except for the for loop in computeTotalCost. When control flows between two statements with different placements, a control transfer occurs. For example, on line 21 in Fig. 3, execution is suspended at the application server and resumes at line 22 on the database server.

Arrays are handled differently from objects. The placement of an array is defined by its allocation site, that is, by the placement of the statement allocating the array. This approach means that the contents of an array may be assigned to either partition, but the elements are not split between them. Additionally, since the stack is shared by both partitions, method parameters and local variable declarations do not have placement labels in PyxIL.

```
 1 class Order {
 2   :APP: int id;
 3   :APP: double[] realCosts;
 4   :DB:  double totalCost;
 5   Order(int id) {
 6     :APP: this.id = id;
 7     :APP: sendAPP(this);
 8   }
 9   void placeOrder(int cid, double dct) {
10     :APP: totalCost = 0;
11     :APP: sendDB(this);
12     :APP: computeTotalCost(dct);
13     :APP: updateAccount(cid, totalCost);
14   }
15   void computeTotalCost(double dct) {
16     int i; double[] costs;
17     :APP: costs = getCosts();
18     :APP: realCosts = new double[costs.length];
19     :APP: sendAPP(this);
20     :APP: sendNative(realCosts, costs);
21     :APP: i = 0;
22     for (:DB: itemCost : costs) {
23       double realCost;
24       :DB: realCost = itemCost * dct;
25       :DB: totalCost += realCost;
26       :DB: sendDB(this);
27       :DB: realCosts[i++] = realCost;
28       :DB: sendNative(realCosts);
29       :DB: insertNewLineItem(id, realCost);
30     }
31   }
32 }
```

Figure 3: A PyxIL version of the Order class

3.2 State Synchronization

Although all data in PyxIL has an assigned placement, remote data may be transferred to or updated by any host. Each host maintains a local heap for fields and arrays placed at that host, as well as a remote cache for remote data. When a field or array is accessed, the current value in the local heap or remote cache is used. Hosts synchronize their heaps using eager batched updates: modifications are aggregated and sent on each control transfer so that accesses made by the remote host are up to date. A host's local heap is kept up to date whenever that host is executing code. When a host executes statements that modify remotely partitioned data in its cache, those updates must be transferred on the next control transfer. For the local heap, however, updates only need to be sent to the remote host before they are accessed there. If static analysis determines that no such access occurs, no update message is required.

In some scenarios, eagerly sending updates may be suboptimal. If the latency incurred by transferring unused updates exceeds the cost of an extra round trip, it may be better to request the data lazily as needed. In this work, we only generate PyxIL programs that send updates eagerly, but investigating hybrid update strategies is an interesting future direction.

PyxIL programs maintain the above heap invariants using explicit synchronization operations. Recall that classes are partitioned into two partial classes, one for APP and one for DB. The sendAPP operation sends the APP part of its argument to the remote host. In Fig. 3, line 7 sends the contents of id, while line 26 sends totalCost and the array reference realCosts. Since arrays are placed dynamically based on their allocation site, a reference to an array may alias both locally and remotely partitioned arrays. On line 20, the contents of the array realCosts allocated at line 18 are sent to the database server using the sendNative operation.³ The sendNative operation is also used to transfer unpartitioned native Java objects that are serializable. Like arrays, native Java objects are assigned locations based on their allocation site.

Send operations are batched together and executed at the next control transfer. The sendDB operation on line 26 and the sendNative operation on line 28 are inside a for loop. Even so, the updates are only sent when control is transferred back to the application server.

³For presentation purposes, the contents of costs are also sent here. This operation would typically occur in the body of getCosts().

4. PARTITIONING PYXIS CODE

Pyxis finds a partitioning for a user program by generating partitions with respect to a representative workload that the user wishes to optimize. The program is profiled using this workload, producing the inputs on which the partitioning is based.

4.1 Profiling

Pyxis profiles the application so that it can estimate the size of data transfers and the number of control transfers for any particular partitioning. To this end, statements are instrumented to collect the number of times they are executed, and assignment expressions are instrumented to measure the average size of the assigned objects. The application is then executed for a period of time to collect data. Data collected from profiling is used to set weights in the partition graph.

This profile need not perfectly characterize the future interactions between the database and the application, but a grossly inaccurate profile could lead to suboptimal performance. For example, with inaccurate profiler data, Pyxis could choose to partition the program where it expects few control transfers, when in reality the program might exercise that piece of code very frequently. For this reason, developers may need to re-profile their applications if the workload changes dramatically, and have Pyxis dynamically switch among the different partitions.
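The eager, batched synchronization of Sec. 3.2 can be illustrated with a small single-process simulation. The sketch below is not the Pyxis runtime. `SyncSimulation`, `Host`, `run` and the string-keyed heap are hypothetical stand-ins. Each statement carries a placement, and `send` only marks a field as dirty, loosely playing the role of sendAPP/sendDB. The current values of all dirty fields are shipped together when the next statement has a different placement, that is, at a control transfer.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Consumer;

// Hypothetical simulation of eager, batched heap synchronization (Sec. 3.2).
// Names are illustrative only; they are not the Pyxis runtime API.
class SyncSimulation {
    enum Placement { APP, DB }

    /** A host: one heap (own fields plus cached remote copies) and a dirty set. */
    static final class Host {
        final Map<String, Object> heap = new HashMap<>();
        final Set<String> dirty = new LinkedHashSet<>();

        /** Stand-in for sendAPP/sendDB: mark a field for the next batch. */
        void send(String field) { dirty.add(field); }
    }

    final Host app = new Host();
    final Host db = new Host();
    Host current = app;                 // execution starts on the application server
    int controlTransfers = 0;
    final List<Integer> batchSizes = new ArrayList<>();

    /** Executes one statement at `where`, transferring control first if needed. */
    void run(Placement where, Consumer<Host> stmt) {
        Host target = (where == Placement.APP) ? app : db;
        if (target != current) {
            // Control transfer: ship the current values of all dirty fields at once.
            for (String f : current.dirty) target.heap.put(f, current.heap.get(f));
            batchSizes.add(current.dirty.size());
            current.dirty.clear();
            current = target;
            controlTransfers++;
        }
        stmt.accept(current);
    }

    /** Loosely mirrors Fig. 3: totalCost is accumulated in a loop placed on DB. */
    static SyncSimulation demo(double[] costs, double dct) {
        SyncSimulation sim = new SyncSimulation();
        sim.run(Placement.APP, h -> { h.heap.put("totalCost", 0.0); h.send("totalCost"); });
        for (double itemCost : costs) {
            sim.run(Placement.DB, h -> {
                h.heap.put("totalCost", (Double) h.heap.get("totalCost") + itemCost * dct);
                h.send("totalCost");    // marked on every iteration, shipped once
            });
        }
        sim.run(Placement.APP, h -> { }); // e.g. updateAccount(cid, totalCost)
        return sim;
    }
}
```

Take three items at discount 0.5. The loop runs entirely on the database side, so the simulation performs exactly two control transfers, each carrying a batch of one field. The sends inside the loop add no extra messages.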
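The two kinds of instrumentation described in Sec. 4.1 are execution counts per statement and the average size of assigned values. They can be sketched as a pair of hooks. `StatementProfiler`, its hook names and the instrumented copy of `computeTotalCost` are hypothetical. Statement ids reuse the Fig. 2 line numbers, and the 8-bytes-per-double size estimate is an assumption, not how Pyxis measures object sizes.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical profiler in the spirit of Sec. 4.1. Statement ids are Fig. 2
// line numbers; sizes assume 8 bytes per double (an illustrative estimate).
class StatementProfiler {
    private final Map<Integer, Long> counts = new HashMap<>();
    private final Map<Integer, long[]> sizes = new HashMap<>(); // {total bytes, samples}

    /** Hook inserted before a statement: bump its execution count. */
    void executed(int stmt) { counts.merge(stmt, 1L, Long::sum); }

    /** Hook inserted after an assignment, given the assigned value's size. */
    void assigned(int stmt, long bytes) {
        long[] acc = sizes.computeIfAbsent(stmt, k -> new long[2]);
        acc[0] += bytes;
        acc[1]++;
    }

    /** cnt(s): how often statement s ran during profiling. */
    long cnt(int stmt) { return counts.getOrDefault(stmt, 0L); }

    /** size(def): average bytes assigned by statement def. */
    double avgSize(int stmt) {
        long[] acc = sizes.get(stmt);
        return acc == null ? 0.0 : (double) acc[0] / acc[1];
    }

    /** An instrumented copy of the core of computeTotalCost (Fig. 2, lines 16-22). */
    static double computeTotalCost(StatementProfiler p, double[] costs, double dct) {
        double total = 0;
        p.executed(16);
        double[] realCosts = new double[costs.length];
        p.assigned(16, 8L * realCosts.length);
        int i = 0;
        for (double itemCost : costs) {
            p.executed(19);
            double realCost = itemCost * dct;
            p.assigned(19, 8L);
            p.executed(20);
            total += realCost;
            p.executed(21);
            realCosts[i++] = realCost;
            p.executed(22); // insertNewLineItem(id, realCost) would run here
        }
        return total;
    }
}
```

Suppose the profiled workload contains two orders, with three items and one item. Statement 16 is then counted twice with an average assigned size of 16 bytes, and the loop-body statements are counted four times. These are the `cnt` and `size` inputs to the partition-graph weights.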
realCosts = new double[costs.length]; itemCost 16 4.2 ThePartitionGraph realCosts realCosts Afterprofiling,thenormalizedsourcefilesaresubmittedtothe double totalCost; 4 realCost = itemCost * dct;19 elements partitionertoassignplacementsforcodeanddataintheprogram. totalCost realCost realCost realCost First, thepartitionerperformsanobject-sensitivepoints-toanaly- totalCost += realCost; insertNewLineItem(id, realCost); realCosts[i++] = realCost; sis[22]usingtheAccrueAnalysisFramework[7]. Thisanalysis 20 22 21 approximatesthesetofobjectsthatmaybereferencedbyeachex- pressionintheprogram.Usingtheresultsofthepoints-toanalysis, Figure4:ApartitiongraphforpartofthecodefromFig.2 an interprocedural def/use analysis links together all assignment statements (defs) with expressions that may observe the value of proportionaltothenumberoftimesthedependencyissatisfied.Let thoseassignments(uses). Next,acontroldependencyanalysis[3] cnt(s)bethenumberoftimesstatementswasexecutedinthepro- linksstatementsthatcausebranchesinthecontrolflowgraph(i.e., file. Givenacontrolordataedgefromstatementsrc tostatement ifs,loops,andcalls)withthestatementswhoseexecutiondepends dst,weapproximatethenumberoftimestheedgeewassatisfied onthem. Forinstance,eachstatementinthebodyofaloophasa ascnt(e)=min(cnt(src),cnt(dst)). controldependencyontheloopconditionandisthereforelinkedto Let size(def) represent the average size of the data that is as- theloopcondition. signedbystatementdef. Then,givenanaveragenetworklatency Theprecisionoftheseanalysescanaffectthequalityofthepar- LATandabandwidthBW,thepartitionerassignsweightstoedges titions found by Pyxis and therefore performance. To preserve eandnodessasfollows: soundness, the analysis is conservative, which means that some • Controledgee:LAT·cnt(e) dependenciesidentifiedbytheanalysismaynotbenecessary. Un- necessarydependenciesresultininaccuraciesinthecostmodeland size(src) superfluous data transfers at run time. 
For this work we used a • Dataedgee: ·cnt(e) BW “2full+1H”object-sensitiveanalysisasdescribedin[29]. size(src) Dependencies. Usingtheseanalyses,thepartitionerbuildsthepar- • Updateedgee: ·cnt(dst) BW titiongraph,whichrepresentsinformationabouttheprogram’sde- pendencies. Thepartitiongraphcontainsnodesforeachstatement • Statementnodes:cnt(s) intheprogramandedgesforthedependenciesbetweenthem. Inthepartitiongraph,eachstatementandfieldintheprogramis • Fieldnode:0 representedbyanodeinthegraph. Dependenciesbetweenstate- Notethatsomeoftheseweights,suchasthoseoncontrolanddata mentsandfieldsarerepresentedbyedges,andedgeshaveweights edges, representtimes. Thepartitioner’sobjectiveistominimize thatmodelthecostofsatisfyingthosedependencies. Edgesrepre- thesumoftheweightsofedgescutbyagivenpartitioning. The sentdifferentkindsofdependenciesbetweenstatements: weight on statement nodes is used separately to enforce the con- • A control edge indicates a control dependency between two straintthatthetotalCPUloadontheserverdoesnotexceedagiven nodesinwhichthecomputationatthesourcenodeinfluences maximumvalue. whetherthestatementatthedestinationnodeisexecuted. The formula for data edges charges for bandwidth but not la- • A data edge indicates a data dependency in which a value tency.Forallbutextremelylargeobjects,theweightsofdataedges assigned in the source statement influences the computation per- arethereforemuchsmallerthantheweightsofcontroledges. By formedatthedestinationstatement. Inthiscasethesourcestate- setting weights this way, we leverage an important aspect of the mentisadefinition(ordef)andthedestinationisause. Pyxis runtime: satisfying a data dependency does not necessar- • Anupdateedgerepresentsanupdatetotheheapandconnects ilyrequireseparatecommunicationwiththeremotehost. Asde- fielddeclarationstostatementsthatupdatethem. scribed in Sec. 
3.2, PyxIL programs maintain consistency of the Partofthepartitiongraphforourrunningexampleisshownin heap by batching updates and sending them on control transfers. Fig.4. Eachnodeinthegraphislabeledwiththecorresponding Controldependenciesbetweenstatementsondifferentpartitionsin- linenumberfromFig.2.Note,forexample,thatwhilelines20–22 herentlyrequirecommunicationinordertotransfercontrol. How- appearsequentiallyintheprogramtext,thepartitiongraphshows ever,sinceupdatestodatacanbepiggy-backedonacontroltrans- thattheselinescanbesafelyexecutedinanyorder,aslongasthey fer,themarginalcosttosatisfyadatadependencyisproportional followline19. Thepartitioneraddsadditionaledges(notshown) to the size of the data. For most networks, bandwidth delay is foroutputdependencies(write-after-write)andanti-dependencies much smaller than propagation delay, so reducing the number of (read-before-write),buttheseedgesarecurrentlyonlyusedduring messagesapartitionrequireswillreducetheaveragelatencyeven codegenerationanddonotaffectthechoiceofnodeplacements. thoughthesizeofmessagesincreases. Furthermore,byencoding ForeachJDBCcallthatinteractswiththedatabase,wealsoinsert thispropertyofdatadependenciesasweightsinthepartitiongraph, controledgestonodesrepresenting“databasecode.” we influence the choice of partition made by the solver; cutting Edgeweights. Thetradeoffbetweennetworkoverheadandserver controledgesisusuallymoreexpensivethancuttingdataedges. loadisrepresentedbyweightsonnodesandedges.Eachstatement Oursimplecostmodeldoesnotalwaysaccuratelyestimatethe nodeassignedtothedatabaseresultsinadditionalestimatedserver cost of control transfers. For example, a series of statements in loadinproportiontotheexecutioncountofthatstatement. Like- ablockmayhavemanycontroldependenciestocodeoutsidethe wise,eachdependencythatconnectstwostatements(orastatement block. 
Cutting all these edges could be achieved with as few as andafield)onseparatepartitionsincursestimatednetworklatency onecontroltransferatruntime,butinthecostmodel,eachcutedge X Finally,thepartitionergeneratesaPyxILprogramfromthepar- Minimize: e ·w i i titiongraph.Foreachfieldandstatement,thecodegeneratoremits ei∈Edges a placement annotation :APP: or :DB: according to the solution returnedbythesolver. Foreachdependencyedgebetweenremote nj−nk−ei ≤0 statements,thecodegeneratorplacesaheapsynchronizationoper- Subjectto: n −n −e ≤0 ationafterthesourcestatementtoensuretheremoteheapisupto k j i datewhenthedestinationstatementisexecuted. Synchronization . .. operationsarealwaysplacedafterstatementsthatupdateremotely X partitionedfieldsorarrays. n ·w ≤Budget i i 4.4 StatementReordering ni∈Nodes A partition graph may generate several valid PyxIL programs Figure5:Partitioningproblem that havethe samecost under thecost modelsince the execution orderofsomestatementsinthegraphisambiguous. Toeliminate contributesitsweight,leadingtooverestimationofthetotalparti- unnecessarycontroltransfersinthegeneratedPyxILprogram,the tioningcost.Also,fluctuationsinnetworklatencyandCPUutiliza- codegeneratorperformsareorderingoptimizationtocreatelarger tioncouldalsoleadtoinaccurateaverageestimatesandthusresult contiguousblockswiththesameplacement,reducingcontroltrans- insuboptimalpartitions. Weleavemoreaccuratecostestimatesto fers. Because it captures all dependencies, the partition graph is futurework. particularlywellsuitedtothistransformation. 
Infact,PDGshave 4.3 OptimizationUsingIntegerProgramming beenappliedtosimilarproblemssuchasvectorizingprogramstate- ments[14].Thereorderingalgorithmissimple.Recallthatinaddi- The weighted graph is then used to construct a Binary Integer tiontocontrol,data,andupdateedges,thepartitionerincludesad- Programmingproblem[31].Foreachnodewecreateabinaryvari- ditional(unweighted)edgesforoutputdependencies(forordering ablen∈Nodesthathasvalue0ifitispartitionedtotheapplication writes)andanti-dependencies(fororderingreadsbeforewrites)in serverand1ifitispartitionedtothedatabase. Foreachedgewe thepartitiongraph.Wecanusefullyreorderthestatementsofeach createavariablee ∈ Edgesthatis0ifitconnectsnodesassigned blockwithoutchangingthesemanticsoftheprogram[14]bytopo- to the same partition and 1 if it is cut; that is, the edge connects logicallysortingthepartitiongraphwhileignoringback-edgesand nodes on different partitions. This problem formulation seeks to interproceduraledges4. minimizenetworklatencysubjecttoaspecifiedbudgetofinstruc- Thetopologicalsortisimplementedasabreadth-firsttraversal tionsthatmaybeexecutedontheserver. Ingeneral,theproblem overthepartitiongraph. Whereasatypicalbreadth-firsttraversal hastheformshowninFigure5.Theobjectivefunctionisthesum- woulduseasingleFIFOqueuetokeeptrackofnodesnotyetvis- mationofedgevariablese multipliedbytheirrespectiveweights, i ited, the reordering algorithm uses two queues, one for DB state- w . Foreachedgewegeneratetwoconstraintsthatforcetheedge i mentsandoneforAPPstatements. Nodesaredequeuedfromone variablee toequal1iftheedgeiscut. Notethatforbothofthese i queueuntilitisexhausted,generatingasequenceofstatementsthat constraintstohold,ifn (cid:54)= n thene = 1,andifn = n then j k i j k arealllocatedinonepartition. Thenthealgorithmswitchestothe e =0.ThefinalconstraintinFigure5ensuresthatthesummation i otherqueueandstartsgeneratingstatementsfortheotherpartition. 
4.5 Insertion of Synchronization Statements

The code generator is also responsible for placing heap synchronization statements to ensure consistency of the Pyxis distributed heap. Whenever a node has outgoing data edges, the code generator emits a sendAPP or sendDB, depending on where the updated data is partitioned. At the next control transfer, the data for all objects so recorded is sent to update the remote heap.

Heap synchronization is conservative. A data edge represents a definition that may reach a field or array access. Eagerly synchronizing each data edge ensures that all heap locations will be up to date when they are accessed. However, imprecision in the reaching-definitions analysis is unavoidable, since predicting future accesses is undecidable. Therefore, eager synchronization is sometimes wasteful and may add unnecessary latency by transferring updates that are never used.

The Pyxis runtime system also supports lazy synchronization, in which objects are fetched from the remote heap at the point of use. If a use of an object performs an explicit fetch, data edges to that use can be ignored when generating data synchronization. Lazy synchronization makes sense for large objects and for uses in infrequently executed code. In the current Pyxis implementation, lazy synchronization is not used in normal circumstances. We leave a hybrid eager/lazy synchronization scheme to future work.
5. PYXIL COMPILER

The PyxIL compiler translates PyxIL source code into two partitioned Java programs that together implement the semantics of the original application when executed on the Pyxis runtime. To illustrate this process, we give an abridged version of the compiled Order class from the running example. Fig. 6 shows how Order objects are split into two objects of classes Order_app and Order_db, containing the fields assigned to APP and DB, respectively, in Fig. 3. Both classes contain a field oid (line 2), which is used to identify the object in the Pyxis-managed heaps (denoted by DBHeap and APPHeap).

```
1 public class Order {
2   ObjectId oid;
3   class Order_app { int id; ObjectId realCostsId; }
4   class Order_db { double totalCost; }
5   ...
6 }
```

Figure 6: Partitioning fields into APP and DB objects
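A minimal sketch of the split in Fig. 6 follows. It assumes that the two Pyxis-managed heaps can be modeled as in-process maps keyed by object ID; in Pyxis each heap lives on a different server. The names `SplitOrder`, `OrderApp`, `OrderDb`, and `newOrder` and the long-valued IDs are hypothetical, and the `realCostsId` field is omitted for brevity.

```java
import java.util.HashMap;
import java.util.Map;

/** One logical Order stored as two halves that share an object ID (hypothetical names). */
public class SplitOrder {
    static final class OrderApp { int id; }            // fields placed on APP
    static final class OrderDb { double totalCost; }   // fields placed on DB

    // In-process stand-ins for APPHeap and DBHeap, indexed by oid.
    static final Map<Long, OrderApp> APP_HEAP = new HashMap<>();
    static final Map<Long, OrderDb> DB_HEAP = new HashMap<>();
    private static long nextOid = 1;

    /** Allocates both halves of a new Order under a single object ID. */
    static long newOrder(int id) {
        long oid = nextOid++;
        OrderApp app = new OrderApp();
        app.id = id;
        APP_HEAP.put(oid, app);
        DB_HEAP.put(oid, new OrderDb());
        return oid;
    }

    public static void main(String[] args) {
        long oid = newOrder(17);
        DB_HEAP.get(oid).totalCost += 12.5;  // DB-side code touches only the DB half
        System.out.println(APP_HEAP.get(oid).id + " " + DB_HEAP.get(oid).totalCost);
    }
}
```

The shared oid lets code on either side refer to "the same" Order while touching only the fields placed locally. Remote fields are reachable only through heap synchronization.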
5.1 Representing Execution Blocks

In order to assign program statements arbitrarily to either the application server or the database server, the runtime needs complete control over program control flow as it transfers between the servers. Pyxis accomplishes this by compiling each PyxIL method into a set of execution blocks, each of which corresponds to a straight-line PyxIL code fragment. For example, Fig. 7 shows the code generated for the method computeTotalCost in the Order class. This code includes execution blocks for both the APP and DB partitions. Note that local variables in the PyxIL source are translated into indices of an array stack that is explicitly maintained in the Java code and models the stack frame of the method currently executing.

The key to managing control flow is that each execution block ends by returning the identifier of the next execution block. This style of code generation is similar to that used by the SML/NJ compiler [30] to implement continuations; indeed, code generated by the PyxIL compiler is essentially in continuation-passing style [13].
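The block-returns-next-block discipline amounts to a trampoline. The sketch below is a hypothetical, single-process illustration and not Pyxis's generated code. A loop that multiplies each element of a `costs` array by a discount `dct` and sums the results is split into four blocks, and local variables live in an explicit `stack` array. A driver loop keeps invoking whichever block the previous one returned until a `RETURN` marker appears. The block layout, the `RETURN` value, and all names are assumptions.

```java
import java.util.function.IntSupplier;

/** Trampoline over straight-line execution blocks (hypothetical layout). */
public class ExecutionBlocks {
    static final int RETURN = -1;                // marker: the method has returned
    static double[] stack = new double[3];       // explicit frame: [0] = i, [1] = total, [2] = dct
    static double[] costs = {10.0, 20.0, 30.0};  // stand-in for a heap object

    // Each block runs straight-line code, then returns the id of the next block.
    static final IntSupplier[] BLOCKS = {
        /* 0: entry     */ () -> { stack[0] = 0; stack[1] = 0; return 1; },
        /* 1: loop test */ () -> ((int) stack[0] < costs.length) ? 2 : 3,
        /* 2: loop body */ () -> { stack[1] += costs[(int) stack[0]] * stack[2]; stack[0]++; return 1; },
        /* 3: exit      */ () -> RETURN,
    };

    /** Runs blocks starting at entry until one returns RETURN; reports how many blocks ran. */
    static int run(int entry) {
        int executed = 0;
        for (int pc = entry; pc != RETURN; pc = BLOCKS[pc].getAsInt()) executed++;
        return executed;
    }

    public static void main(String[] args) {
        stack[2] = 0.5;  // the dct argument
        int executed = run(0);
        System.out.println(stack[1] + " after " + executed + " blocks");
    }
}
```

Running `main` leaves 30.0 in `stack[1]` after nine blocks: the entry block, three test/body pairs, the failing test, and the exit block. Because control returns to `run` between blocks, the driver loop is the natural place for a runtime to hand the next block to another server instead of running it locally.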
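For instance, in Fig. 7, execution of computeTotalCost starts with block computeTotalCost0 at line 1. After creating a new stack frame and passing in the object ID of the receiver, the block asks the runtime to execute getCosts0 with computeTotalCost1 recorded as the return address. The runtime then executes the execution blocks associated with getCosts(). When getCosts() returns, it jumps to computeTotalCost1 to continue the method, where the result of the call is popped into stack[2].

Next, control is transferred to the database server on line 14, because block computeTotalCost1 returns the identifier of a block that is assigned to the database server (computeTotalCost2). This implements the transition in Fig. 3 from (APP) line 21 to (DB) line 22. Execution then continues with computeTotalCost3, which implements the evaluation of the loop condition in Fig. 3.

This example shows how the use of execution blocks gives the Pyxis partitioner complete freedom to place each piece of code and data on either server. Furthermore, because the runtime regains control after every execution block, it can perform other tasks, such as garbage collection on local heap objects, between execution blocks or while waiting for the remote server to finish its part of the computation.

```
// Stack locations for computeTotalCost:
//   stack[0] = oid                    stack[4] = i
//   stack[1] = dct                    stack[5] = loop index
//   stack[2] = object ID for costs    stack[6] = realCost
//   stack[3] = costs.length

// APP code
 1 computeTotalCost0:
 2   pushStackFrame(stack[0]);
 3   setReturnPC(computeTotalCost1);
 4   return getCosts0;  // call this.getCosts()
 5
 6 computeTotalCost1:
 7   stack[2] = popStack();
 8   stack[3] = APPHeap[stack[2]].length;
 9   oid = stack[0];
10   APPHeap[oid].realCosts = nativeObj(new double[stack[3]]);
11   sendAPP(oid);
12   sendNative(APPHeap[oid].realCosts, stack[2]);
13   stack[4] = 0;
14   return computeTotalCost2;  // control transfer to DB

// DB code
15 computeTotalCost2:
16   stack[5] = 0;  // i = 0
17   return computeTotalCost3;  // start the loop
18
19 computeTotalCost3:
20   if (stack[5] < stack[3])  // loop index < costs.length
21     return computeTotalCost4;  // loop body
22   else return computeTotalCost5;  // loop exit
23
24 computeTotalCost4:
25   itemCost = DBHeap[stack[2]][stack[5]];
26   stack[6] = itemCost * stack[1];
27   oid = stack[0];
28   DBHeap[oid].totalCost += stack[6];
29   sendDB(oid);
30   APPHeap[oid].realCosts[stack[4]++] = stack[6];
31   sendNative(APPHeap[oid].realCosts);
32   ++stack[5];
33   pushStackFrame(APPHeap[oid].id, stack[6]);
34   setReturnPC(computeTotalCost3);
35   return insertNewLineItem0;  // call insertNewLineItem
36
37 computeTotalCost5:
38   return returnPC;
```

Figure 7: Running example: APP code (lines 1-14) and DB code (lines 15-38). For simplicity, execution blocks are presented as a sequence of statements preceded by a label.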
5.2 Interoperability with Existing Modules

Pyxis does not require that the whole application be partitioned. This is useful, for instance, if the code to be partitioned is a library used by other, non-partitioned code. Another benefit is that code without an explicit main method can be partitioned, such as a servlet whose methods are invoked by the application server. To use this feature, the developer indicates the entry points within the source code to be partitioned, i.e., methods that the developer exposes to invocations from non-partitioned code. The PyxIL compiler automatically generates a wrapper for each entry point, such as the one shown in Fig. 8, that does the necessary stack setup and teardown to interface with the Pyxis runtime.

```java
public class Order {
  ...
  public void computeTotalCost(double dct) {
    pushStackFrame(oid, dct);
    execute(computeTotalCost0);
    popStackFrame();
    return; // no return value
  }
}
```

Figure 8: Wrapper for interfacing with regular Java
6. PYXIS RUNTIME SYSTEM

The Pyxis runtime executes the compiled PyxIL program. The runtime is a Java program that runs in unmodified JVMs on each server. In this section we describe its operation and how control transfer and heap synchronization are implemented.

6.1 General Operations

The runtime maintains the program stack and distributed heap. Each execution block discussed in Sec. 5.1 is implemented as a Java class with a call method that implements the program logic of the given block. When execution starts from any of the entry points, the runtime invokes the call method on the block that was passed in from the entry point wrapper. Each call method returns the next block for the runtime to execute. This process continues on the local runtime until it encounters an execution block that is assigned to the remote runtime. When that happens, the runtime sends a control transfer message to the remote runtime and waits until the remote runtime returns control, informing it of the next execution block to run on its side.

Pyxis does not currently support thread instantiation or shared-memory multithreading, but a multithreaded application can first instantiate threads outside of Pyxis and then have Pyxis manage the code to be executed by each of the threads. Additionally, the current implementation does not support exception propagation across servers, but extending support in the future should not require substantial engineering effort.
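The hand-off between the two runtimes can be simulated in a single process. In this hypothetical sketch each block carries its placement. The local loop keeps executing blocks until the next one belongs to the other side. At that point, a string appended to `messages` stands in for the control transfer message; a real runtime would also block until the remote side returns control. The `Block` record, the `Side` enum, and the demo program are assumptions, and stack and heap contents are omitted. Records require Java 16 or later.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.IntSupplier;

/** Two simulated runtimes passing control by block id (hypothetical names). */
public class RuntimeHandoff {
    enum Side { APP, DB }
    static final int RETURN = -1;

    /** An execution block: its placement and the code that returns the next block id. */
    record Block(Side side, IntSupplier call) {}

    static final List<String> messages = new ArrayList<>();
    static int loopIndex;  // stand-in for a stack slot used by the DB-side loop

    /** Runs blocks on one side until the method returns or the next block is remote. */
    static int runLocal(Side side, int pc, Block[] blocks) {
        while (pc != RETURN && blocks[pc].side() == side) pc = blocks[pc].call().getAsInt();
        return pc;
    }

    /** Alternates between the runtimes; each switch stands in for one control transfer message. */
    static void execute(int entry, Block[] blocks) {
        Side side = Side.APP;  // entry-point wrappers are invoked on the application server
        int pc = entry;
        while (true) {
            pc = runLocal(side, pc, blocks);
            if (pc == RETURN) return;
            side = blocks[pc].side();
            messages.add("transfer to " + side + " at block " + pc);
        }
    }

    static Block[] demoBlocks() {
        return new Block[] {
            new Block(Side.APP, () -> 1),
            new Block(Side.APP, () -> 2),                          // next block lives on DB
            new Block(Side.DB, () -> { loopIndex = 0; return 3; }),
            new Block(Side.DB, () -> loopIndex < 2 ? 4 : 5),       // loop test
            new Block(Side.DB, () -> { loopIndex++; return 3; }),  // loop body
            new Block(Side.APP, () -> RETURN),                     // back on APP to return
        };
    }

    public static void main(String[] args) {
        execute(0, demoBlocks());
        messages.forEach(System.out::println);
    }
}
```

The demo produces exactly two messages: one when execution moves to DB at block 2, and one when control comes back to APP at block 5. The two iterations of the DB-side loop cost no transfers, because consecutive blocks on the same side stay inside `runLocal`.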
6.2 Program State Synchronization

When a control transfer happens, the local runtime needs to communicate with the remote runtime about any changes to the program state (i.e., changes to the stack or program heap). Stack changes are always sent along with the control transfer message and as such are not explicitly encoded in the PyxIL code. However, requests to synchronize the heaps are explicitly embedded in the PyxIL code, allowing the partitioner to make intelligent decisions about which modified objects need to be sent and when. As mentioned in Sec. 4.5, Pyxis includes two heap synchronization routines, sendDB and sendAPP, depending on which portion of the heap is to be sent. In the implementation, the heap objects to be sent are simply piggy-backed onto the control transfer messages (just like stack updates) to avoid initiating more round trips. We measure the overhead of heap synchronization and discuss the results in Sec. 7.3.
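A minimal sketch of piggy-backing follows, assuming an in-process model. Here `send` plays the role of sendDB/sendAPP by only recording an object ID. `transfer` then drains every recorded object into the same message that carries the next block and a copy of the stack. The `TransferMessage` record, the map-based heaps, and all method names are hypothetical, not the Pyxis runtime API.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

/** Heap updates recorded by send() ride along with the next control transfer (hypothetical). */
public class PiggybackSync {
    /** One control transfer message: next block, stack snapshot, and recorded heap objects. */
    record TransferMessage(int nextBlock, double[] stack, Map<Integer, Object> heapUpdates) {}

    private final Map<Integer, Object> localHeap = new HashMap<>();
    private final Set<Integer> pending = new LinkedHashSet<>();

    void write(int oid, Object value) { localHeap.put(oid, value); }

    /** Analogue of sendDB(oid)/sendAPP(oid): records the object; nothing goes on the wire yet. */
    void send(int oid) { pending.add(oid); }

    /** Builds the control transfer message and clears the pending set. */
    TransferMessage transfer(int nextBlock, double[] stack) {
        Map<Integer, Object> updates = new LinkedHashMap<>();
        for (int oid : pending) updates.put(oid, localHeap.get(oid));
        pending.clear();
        return new TransferMessage(nextBlock, stack.clone(), updates);
    }

    /** Receiving runtime: install the piggy-backed objects before running nextBlock. */
    static void apply(TransferMessage msg, Map<Integer, Object> remoteHeap) {
        remoteHeap.putAll(msg.heapUpdates());
    }

    public static void main(String[] args) {
        PiggybackSync db = new PiggybackSync();
        db.write(7, 42.0);
        db.send(7);
        db.send(7);              // repeated requests for one object coalesce
        db.write(8, "unsent");   // modified locally but never passed to send()
        TransferMessage msg = db.transfer(2, new double[] {1.0, 0.5});
        Map<Integer, Object> appHeap = new HashMap<>();
        apply(msg, appHeap);
        System.out.println(appHeap);  // only object 7 arrives
    }
}
```

Because the updates travel inside the transfer message, heap synchronization adds bytes to an existing round trip rather than creating a new one. The price, as noted in Sec. 4.5, is that every object recorded since the last transfer is sent whether or not the remote side reads it.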
6.3 Selecting a Partitioning Dynamically

The runtime also supports dynamically choosing between partitionings with different CPU budgets based on the current load on the database server. It uses a feedback-based approach in which the Pyxis runtime on the database server periodically polls the CPU utilization on the server and communicates that information to the application server's runtime.

At each time $t$ when a load message arrives with server load $S_t$, the application server computes an exponentially weighted moving average (EWMA) of the load:

$$L_t = \alpha L_{t-1} + (1 - \alpha) S_t$$

Depending on the value of $L_t$, the application server's runtime dynamically chooses which partitioning to execute at each of the entry points. For instance, if $L_t$ is high (i.e., the database server is currently loaded), the application server's runtime chooses a partitioning that was generated using a low CPU budget until the next load message arrives. Otherwise, the runtime uses a partitioning that was generated with a higher CPU budget, since $L_t$ indicates that CPU resources are available on the database server. The use of EWMA here prevents oscillations from one deployment mode to another. In our experiments with TPC-C (Sec. 7.1.3), we used two different partitionings and set the threshold between them to 40% (i.e., if $L_t > 40$, the runtime uses the lower CPU-budget partitioning). Load messages were sent every 10 seconds with $\alpha$ set to 0.2. These values were determined after repeated experimentation.

This simple, dynamic approach works when separate client requests are handled completely independently at the application server, since the two instances of the program do not share any state. This scenario is likely in many server settings; e.g., in a typical web server, each client is completely independent of other clients. Generalizing this approach so that requests sharing state can use this adaptation feature is future work.
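A minimal sketch of the selection logic follows. It assumes that the runtime keeps two precomputed partitionings (represented here only by labels) and that the first load message seeds the average. The class name, method names, and seeding rule are hypothetical. The values α = 0.2 and the 40% threshold are the ones reported above.

```java
/** Load-feedback choice between two precomputed partitionings (hypothetical names). */
public class PartitionSelector {
    private final double alpha;      // α: weight kept by the previous average
    private final double threshold;  // CPU utilization (percent) above which L_t counts as "high"
    private double average;          // L_t
    private boolean seen;            // false until the first load message arrives

    PartitionSelector(double alpha, double threshold) {
        this.alpha = alpha;
        this.threshold = threshold;
    }

    /** Handles a load message carrying utilization s (percent): L_t = α L_{t-1} + (1 - α) S_t. */
    void onLoadMessage(double s) {
        average = seen ? alpha * average + (1 - alpha) * s : s;  // first sample seeds the average
        seen = true;
    }

    double average() { return average; }

    /** Picks the partitioning to run at an entry point until the next load message. */
    String choose() {
        return average > threshold ? "low-CPU-budget" : "high-CPU-budget";
    }

    public static void main(String[] args) {
        PartitionSelector selector = new PartitionSelector(0.2, 40);  // values from Sec. 6.3
        for (double load : new double[] {20, 90, 10}) {
            selector.onLoadMessage(load);
            System.out.printf("S=%.0f L=%.1f -> %s%n", load, selector.average(), selector.choose());
        }
    }
}
```

Feeding loads of 20, 90, and 10 yields averages of 20, 76, and 23.2. The selector therefore switches to the low-budget partitioning after the spike and back once the load drops. With α = 0.2 each new sample carries 80% of the weight, so smoothing is mild; a larger α would damp oscillations more at the cost of slower adaptation.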
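7. EXPERIMENTS

In this section we report experimental results. The goals of the experiments are to evaluate Pyxis's ability to generate partitions of an application under different server loads and input workloads, and to measure the performance of those partitionings as well as the overhead of performing control transfers between runtimes. Pyxis is implemented in Java using the Polyglot compiler framework [24], with Gurobi and lpsolve as the linear program solvers. The experiments used MySQL 5.5.7 as the DBMS with the buffer pool size set to 1GB, hosted on a machine with 16 2.4GHz cores and 24GB of physical RAM. We used Apache Tomcat 6.0.35 as the web server for TPC-W, hosted on a machine with eight 2.6GHz cores and 33GB of physical RAM. The disks on both servers are standard serial ATA disks. The two servers are physically located in the same data center and have a ping round trip time of 2ms. All reported performance results are the average of three experimental runs.

For the TPC-C and TPC-W experiments below, we implemented three different versions of each benchmark and measured their performance as follows:

• JDBC: This is a standard implementation of each benchmark where program logic resides completely on the application server. The program running on the application server connects to the remote DBMS using JDBC and makes requests to fetch or write back data to the DBMS using the obtained connection. A round trip between the two servers is incurred for each database operation.

• Manual: This is an implementation of the benchmarks where all the program logic is manually split into two halves: the "database program," which resides on the JVM running on the database server, and the "application program," which resides on the application server. The application program is simply a wrapper that issues RPC calls via Java RMI to the database program for each type of transaction, passing along the arguments to each transaction type. The database program executes the actual program logic associated with each type of transaction and opens local connections to the DBMS for the database operations. The final results are returned to the application program. This is the exact opposite of the JDBC implementation, with all program logic residing on the database server. Here, each transaction incurs only one round trip. For TPC-C we also implemented a version that implements each transaction as a MySQL user-defined function rather than issuing JDBC calls from a Java program on the database server. We found that this did not significantly impact the performance results.

• Pyxis: To obtain instruction counts, we first profiled the JDBC implementation under different target throughput rates for a fixed period of time. We then asked Pyxis to generate different partitions with different CPU budgets. We deployed the two partitions on the application and database servers using Pyxis and measured their performance.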
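7.1 TPC-C Experiments

In the first set of experiments we implemented the TPC-C workload in Java. Our implementation is similar to an "official" TPC-C implementation but does not include client think time. The database contains data from 20 warehouses (the initial size of the database is 23GB). For the experiments we instantiated 20 clients issuing new order transactions simultaneously from the application server to the database server, with 10% of transactions rolled back. We varied the rate at which the clients issued the transactions and then measured the resulting system's throughput and the average latency of the transactions for 10 minutes.

7.1.1 Full CPU Setting

In the first experiment we allowed the DBMS and JVM on the database server to use all 16 cores on the machine and gave Pyxis a large CPU budget. Fig. 9(a) shows the throughput versus latency. Fig. 9(b) and (c) show the CPU and network utilization under different throughputs.

The results illustrate several points. First, the Manual implementation was able to scale better than JDBC, both in terms of achieving lower latencies and in being able to process more transactions within the measurement period (i.e., achieving a higher overall throughput). The higher throughput of the Manual implementation is expected, since each transaction takes less time to process due to fewer round trips and incurs less lock contention in the DBMS because locks are held for less time.

For Pyxis, the resulting partitions for all target throughputs were very similar to the Manual implementation. Using the provided CPU budget, Pyxis assigned most of the program logic to be executed on the database server. This is the desired result: since there are CPU resources available on the database server, it is advantageous to push as much computation to it as possible to achieve the maximum reduction in the number of round trips between the servers. The difference in performance between the Pyxis and Manual implementations is negligible (within the margin of error of the experiments due to variation in the TPC-C benchmark's randomly generated transactions).

There are some differences in the operations of the Manual and Pyxis implementations, however. For instance, in the Manual implementation, only the method arguments and return values are communicated between the two servers, whereas the Pyxis implementation also needs to transmit changes to the program stack and heap. This can be seen from the network utilization measures in Fig. 9(c), which show that the Pyxis implementation transmits more data than the Manual implementation due to synchronization of the program stack between the runtimes. We experimented with various ways to reduce the number of bytes sent, such as compression and custom serialization, but found that they used more CPU resources and increased latency. However, Pyxis sends data only during control transfers, which are fewer than database operations. Consequently, Pyxis sends less data than the JDBC implementation.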
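7.1.2 Limited CPU Setting

Next we repeated the same experiment, but this time limited the DBMS and JVM on the database server (the JVM applies only to the Manual and Pyxis implementations) to a maximum of three CPUs, and gave Pyxis a small CPU budget. This experiment was designed to emulate programs running on a highly contended database server, or a database server serving load on behalf of multiple applications or tenants. The resulting latencies are shown in Fig. 10(a), with CPU and network utilization shown in Fig. 10(b) and (c). The Manual implementation has lower latencies than Pyxis and JDBC when the throughput rate is low, but for higher throughput values the JDBC and Pyxis implementations outperform Manual. With limited CPUs, the Manual implementation uses up all available CPUs when the target throughput is sufficiently high. In contrast, all partitions produced by Pyxis for different target throughput values resemble the JDBC implementation, in which most of the program logic is assigned to the application server. This configuration enables the Pyxis implementation to sustain higher target throughputs and deliver lower latency when the database server experiences high load. The resulting network and CPU utilization are similar to those of JDBC as well.

7.1.3 Dynamically Switching Partitions

In the final experiment we enabled the dynamic partitioning feature in the runtime as described in Sec. 6.3. The two partitionings used in this case were the same as those used in the previous two experiments (i.e., one that resembles Manual and another that resembles JDBC). In this experiment, however, we fixed the target throughput at 500 transactions/second for 10 minutes, since all implementations were able to sustain that amount of throughput. After three minutes had elapsed, we loaded up most of the CPUs on the database server to simulate the effect of limited CPUs. The average latencies were then measured during each 30-second period, as shown in Fig. 11. For the Pyxis implementation, we also measured the proportion of transactions executed using the JDBC-like partitioning within each 1-minute period. Those proportions are plotted next to each data point.

As expected, the Manual implementation had lower latencies when the server was not loaded. For JDBC the latencies remain constant, since it does not use all available CPUs even when the server is loaded. When the server is unloaded, however, JDBC had higher latency than the Manual implementation. The Pyxis implementation, on the other hand, was able to take advantage of the two implementations with automatic switching. In the ideal case, Pyxis's latencies would be the minimum of the other two implementations at all times. However, due to the use of EWMA, Pyxis took a short period of time to adapt to load changes, although it eventually settled to an all-application (JDBC-like) deployment, as shown by the proportion numbers. This experiment illustrates that even if the developer cannot predict the amount of available CPU resources, Pyxis can generate different partitions under various budgets and automatically choose the best one given the actual resource availability.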
7.2 TPC-W

In the next set of experiments, we used a TPC-W implementation written in Java. The database contained 10,000 items (about 1GB on disk), and the implementation omitted the thinking time. We drove the load using 20 emulated browsers under the browsing mix configuration and measured the average latencies at different target Web Interactions Per Second (WIPS) over a 10-minute period. We repeated the procedure of the TPC-C experiments, first allowing the JVM and DBMS to use all 16 available cores on the database server and then limiting them to three cores only. Fig. 12 and Fig. 13 show the latency results. The CPU and network utilization are similar to those in the TPC-C experiments and are not shown.

Compared to the TPC-C results, we see a similar trend in latency. However, since the program logic in TPC-W is more complicated
