Chapter 7: One-way ANOVA - Heather Lench, Ph.D. PDF


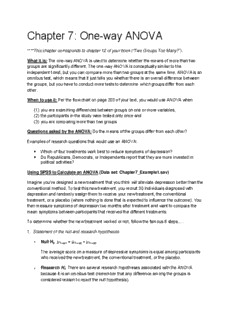

Chapter 7: One-way ANOVA

This chapter corresponds to chapter 12 of your book (“Two Groups Too Many?”).

What it is: The one-way ANOVA is used to determine whether the means of more than two groups are significantly different. It is conceptually similar to the independent t-test, but it lets you compare more than two groups at the same time. ANOVA is an omnibus test, which means that it only tells you whether there is an overall difference among the groups; you have to conduct further tests to determine which groups differ from each other.

When to use it: Per the flow chart on page 203 of your text, you would use ANOVA when: (1) you are examining differences between groups on one or more variables, (2) the participants in the study were tested only once, and (3) you are comparing more than two groups.

Questions asked by the ANOVA: Do the means of the groups differ from each other?

Examples of research questions that would use an ANOVA:
• Which of four treatments works best to reduce symptoms of depression?
• Do Republicans, Democrats, or Independents report that they are more invested in political activities?

Using SPSS to Calculate an ANOVA (Data set: Chapter7_Example1.sav)

Imagine you’ve designed a new treatment that you think will alleviate depression better than the conventional method. To test this new treatment, you recruit 30 individuals diagnosed with depression and randomly assign them to receive your new treatment, the conventional treatment, or a placebo (where nothing is done that is expected to influence the outcome). You then measure symptoms of depression two months after treatment and want to compare the mean symptoms of participants who received the different treatments. To determine whether the new treatment worked or not, follow the famous 8 steps…

1. Statement of the null and research hypotheses

◦ Null: $H_0: \mu_{\text{Treat1}} = \mu_{\text{Treat2}} = \mu_{\text{Treat3}}$
The average score on a measure of depressive symptoms is equal among participants who received the new treatment, the conventional treatment, or the placebo.

◦ Research: There are several research hypotheses associated with the ANOVA because it is an omnibus test (remember that any difference among the groups is considered reason to reject the null hypothesis):
$\bar{X}_{\text{Treat1}} \neq \bar{X}_{\text{Treat2}}$; $\bar{X}_{\text{Treat1}} \neq \bar{X}_{\text{Treat3}}$; $\bar{X}_{\text{Treat2}} \neq \bar{X}_{\text{Treat3}}$
$H_1: \bar{X}_{\text{Treat1}} \neq \bar{X}_{\text{Treat2}} \neq \bar{X}_{\text{Treat3}}$
(For ease, just write out this last statement, but all of the above hypotheses are being made.)
The average score on a measure of depressive symptoms is not equal among participants who received the new treatment, the conventional treatment, or the placebo.

2. Set the level of risk at p < .05

3. Selection of the appropriate test statistic

Using the flowchart on page 203, we see that the ANOVA is the appropriate statistical test because we are comparing the means of more than two independent groups that were tested once. The groups in this example are independent because each one is composed of different people.

Open the dataset “Chapter7_example1.sav”. Take a moment to familiarize yourself with the data. Note how data for this type of analysis should be entered.
1) Each participant has one row in the data.
2) The second column is used to indicate that participant’s status on the group variable (i.e., whether they received the new treatment, conventional treatment, or placebo).
3) The third column indicates each participant’s score on the dependent variable.

Note: For our example, the scores can range from 1-10 because the depression measure includes 10 items and the score reflects a count of how many of those symptoms of depression the participant reported experiencing.

The data should look something like this in SPSS:

4. Computation of the test statistic.
We will use SPSS to compute the test statistic for us. To do so, click on the “Analyze” drop-down menu, highlight “General Linear Model”, and then click on “Univariate”, as pictured below.

The following pop-up window will appear:

Highlight the name of the dependent variable that represents the outcome measure (in this case, “depression”) and click the arrow to place it in the “dependent variable:” box. Next, highlight the name of the independent variable that designates the categorical treatment group (“treatment”) and click the arrow to place it in the “fixed factor(s):” box. Your screen should look like this:

It’s always useful to have descriptive statistics for your analyses, so click “options” in the middle right-hand menu, and check the box for “descriptive statistics”. It should look like this:

Hit “continue” to return to the main pop-up window.

Remember that ANOVA is an omnibus test and will only test the overall equality of the means, not which ones differ. You can ask for post-hoc contrasts between groups, though, so that you’ll know which groups are different from which other groups (this also controls for the inflated Type I error associated with running multiple analyses). Click on the “post hoc” button and it will open this window:

Move the categorical variable “Treatment” over to the “Post Hoc Tests for:” box. You also need to select the type of post hoc contrast that you would like to run. There are multiple options for different types of data, but they are beyond the scope of what we’ll cover in this course. Click on the “Bonferroni” option. The window should look like this:

Hit “continue” and click OK, and navigate to the output window to find your results.
The output will look like this:

Between-Subjects Factors

| type of treatment | Value Label | N |
|---|---|---|
| 1.00 | new treatment | 10 |
| 2.00 | conventional treatment | 10 |
| 3.00 | placebo | 10 |

This box tells you how many levels are in the “treatment” variable and how many people are at each level (here n = 10).

Descriptive Statistics
Dependent Variable: depressive symptoms

| type of treatment | Mean | Std. Deviation | N |
|---|---|---|---|
| new treatment | 1.6000 | .69921 | 10 |
| conventional treatment | 4.8000 | .78881 | 10 |
| placebo | 8.4000 | 1.07497 | 10 |
| Total | 4.9333 | 2.94704 | 30 |

This box tells you the mean depressive symptoms among participants in each of the three groups, the standard deviation, and the n. This is important information for interpreting any significant finding.

Tests of Between-Subjects Effects
Dependent Variable: depressive symptoms

| Source | Type III Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Corrected Model | 231.467(a) | 2 | 115.733 | 153.176 | .000 |
| Intercept | 730.133 | 1 | 730.133 | 966.353 | .000 |
| Treatment | 231.467 | 2 | 115.733 | 153.176 | .000 |
| Error | 20.400 | 27 | .756 | | |
| Total | 982.000 | 30 | | | |
| Corrected Total | 251.867 | 29 | | | |

a R Squared = .919 (Adjusted R Squared = .913)

The row corresponding to your groups is particularly important; here it’s the “treatment” row. You can ignore most of the information in the other rows.

The Sum of Squares column and Mean Square column correspond to the calculations you make when computing an ANOVA by hand. The degrees of freedom for your statistic are listed in the “df” column in the treatment and error rows. The F column gives you the test statistic in the treatment row, and the Sig. column in the treatment row gives the p-value associated with that test statistic.

Post Hoc Tests
type of treatment
Multiple Comparisons
Dependent Variable: depressive symptoms
Bonferroni

| (I) type of treatment | (J) type of treatment | Mean Difference (I-J) | Std. Error | Sig. | 95% Confidence Interval Lower Bound | 95% Confidence Interval Upper Bound |
|---|---|---|---|---|---|---|
| new treatment | conventional treatment | -3.2000(*) | .38873 | .000 | -4.1922 | -2.2078 |
| new treatment | placebo | -6.8000(*) | .38873 | .000 | -7.7922 | -5.8078 |
| conventional treatment | new treatment | 3.2000(*) | .38873 | .000 | 2.2078 | 4.1922 |
| conventional treatment | placebo | -3.6000(*) | .38873 | .000 | -4.5922 | -2.6078 |
| placebo | new treatment | 6.8000(*) | .38873 | .000 | 5.8078 | 7.7922 |
| placebo | conventional treatment | 3.6000(*) | .38873 | .000 | 2.6078 | 4.5922 |

These post-hoc tests contrast each group with every other group. The “Sig.” column gives you the p-value associated with each contrast (there are reasons to prefer the 95% Confidence Interval columns, but that is beyond the scope of this course). In this case, mean depressive symptoms in every group differ significantly from those in every other group at p < .001.

Interpreting the Output

The third table you see (“Tests of Between-Subjects Effects”) contains all of the information related to whether or not the null hypothesis can be rejected.

The “F” column gives the exact obtained value for the effect of “Treatment”: F = 153.18. This is the same number you would get if you calculated the F by hand using the formula from your text. Note that you could use the information from this table in SPSS to create the same F table you make when you calculate an ANOVA by hand (page 210 of your book).

The “df” column gives the degrees of freedom for the ANOVA. For an ANOVA, we include both the degrees of freedom between, which is 2 in this case (from the “treatment” row; k-1, where k is the number of groups), and the degrees of freedom within, which is 27 in this case (from the “error” row; N-k, where N is the total sample size and k is the number of groups).

The “Sig.” column gives the exact p-value associated with the obtained value. Here the value is .000 in the “treatment” row. This means that the obtained value of 153.18 exceeds the critical value at a level of p < .001.
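To make the link between the SPSS table and the hand calculation concrete, here is a minimal Python sketch that rebuilds the F ratio from the sums of squares and degrees of freedom in the “Tests of Between-Subjects Effects” table. The variable names are illustrative, not part of SPSS. The last step assumes the usual equal-n formula for the standard error of a difference between two group means; it is included only because it happens to reproduce the “Std. Error” column of the post hoc table.

```python
import math

# Values copied from the "Tests of Between-Subjects Effects" table.
ss_between = 231.467  # Type III Sum of Squares, "Treatment" row
ss_within = 20.400    # Type III Sum of Squares, "Error" row
ss_total = 251.867    # "Corrected Total" row

k = 3             # number of groups
n_total = 30      # total sample size (N)
n_per_group = 10  # participants in each group

# Degrees of freedom: between = k - 1, within = N - k
df_between = k - 1
df_within = n_total - k

# Mean squares are sums of squares divided by their degrees of freedom.
ms_between = ss_between / df_between
ms_within = ss_within / df_within

# The obtained F is the ratio of the two mean squares.
f_obtained = ms_between / ms_within

# R squared is the share of the total variability explained by treatment.
r_squared = ss_between / ss_total

# Assumed formula: standard error of a difference between two group means
# when both groups have the same n.
se_difference = math.sqrt(ms_within * (1 / n_per_group + 1 / n_per_group))

print(f"F({df_between}, {df_within}) = {f_obtained:.2f}")
print(f"R squared = {r_squared:.3f}")
print(f"Std. error of a mean difference = {se_difference:.5f}")
```

Run as written, this should give F(2, 27) = 153.18 and R squared = .919, matching the SPSS output.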
The fourth table you see (“Post Hoc Tests”) gives the post hoc contrasts that we requested to examine differences between groups. The Bonferroni procedure applies a correction for the inflated Type I error associated with running multiple tests. You’ll see that each treatment is listed in the first column and contrasted with the treatment listed in the second column. For example, the first row gives you the contrast between the new treatment and the conventional treatment. The most relevant column for our purposes is the “Sig.” column. If you look at the first row, you’ll see that the new treatment and the conventional treatment differ from one another with a p-value of .000 (which is less than .05). You’ll notice that each comparison pair appears twice in the table (e.g., the first row next to “new treatment” compares the new treatment to the conventional treatment, and the first row next to “conventional treatment” just flips the comparison and compares the conventional treatment to the new treatment).

5. Determination of the value needed for rejection of the null hypothesis

If you look at pages 336-338 in your text, you’ll see that the critical value at degrees of freedom 2, 27 is 3.36. Remember that the critical value is the minimum value that is big enough to represent an overall difference between groups at p less than .05. Our obtained value is bigger than the critical value, and therefore we know that it is significant. SPSS gave us the exact p-value, though, so we can skip this step.

6. Comparison of the obtained value and the critical value is made

Because SPSS gives us a p-value, all we have to do now is see whether the p-value in the output is greater or less than .05 (the level of risk we are willing to take). In this example, the output tells us that the p-value for our test is .000 (which is less than our cutoff value of .05). This means that we reject the null hypothesis.
Put another way, this means there is less than a 0.1% chance that we would obtain this pattern of results if the null hypothesis were true and there was no effect of the treatment on symptoms of depression.

7/8. Making a Decision

Because our p-value (.000) is less than .05, we reject the null hypothesis. In other words, we conclude that there is a significant difference in symptoms of depression depending on the type of treatment. Because ANOVA is an omnibus test, the F test alone doesn’t tell us which groups differ from other groups. Fortunately, though, you requested post-hoc analyses and can tell that all of the groups differed from each other at p < .001 (remember that you report a value from SPSS of .000 as p < .001).

Interpretation of the Findings

Now we report our results. Here’s an example of these results in a journal article:

An ANOVA revealed that there were significant differences, based on the type of treatment, on depressive symptoms, F(2, 27) = 153.18, p < .001. Post-hoc analyses revealed that participants had lower depression scores after the new treatment (M = 1.60, SD = .70) compared to the conventional treatment (M = 4.80, SD = .79), p < .001, and scores after the conventional treatment were in turn lower than after the placebo treatment (M = 8.40, SD = 1.07), p < .001.

For someone unfamiliar with stats, you might say:

People were less depressed after the new treatment than the conventional treatment, and they were also less depressed after the conventional treatment than a placebo treatment.

Practice Problem #1 for SPSS (answers in appendix)

A researcher is interested in differences in happiness following three types of exercise programs. She asks 30 people, 10 enrolled in each of three programs (spinning, aerobic, weight lifting), to complete a Happiness scale after completing the programs. Higher numbers represent more happiness. Use SPSS to enter the data below and answer the questions that follow.
| Treatment | Happiness |
|---|---|
| Spinning | 7 |
| Spinning | 7 |
| Spinning | 6 |
| Spinning | 5 |
| Spinning | 4 |
| Spinning | 1 |
| Spinning | 7 |
| Spinning | 6 |
| Spinning | 5 |
| Spinning | 3 |
| Aerobic | 6 |
| Aerobic | 5 |
| Aerobic | 5 |
| Aerobic | 5 |
| Aerobic | 6 |
| Aerobic | 4 |
| Aerobic | 2 |
| Aerobic | 6 |
| Aerobic | 4 |
| Aerobic | 4 |
| Weight Lifting | 2 |
| Weight Lifting | 1 |
| Weight Lifting | 3 |
| Weight Lifting | 4 |
| Weight Lifting | 2 |
| Weight Lifting | 3 |
| Weight Lifting | 1 |
| Weight Lifting | 2 |
| Weight Lifting | 4 |
| Weight Lifting | 2 |

A. What are the null and research hypotheses (in both words and statistical format)?
B. What is the level of risk associated with the null hypothesis?
C. What is the appropriate test statistic and WHY?
D. What is the obtained value for the ANOVA test and what is its associated p-value? Is the difference between the groups statistically significant?
E. What do you conclude about the effect of different exercise classes on happiness? Write up your results as you would for a journal article.
F. Write up your results as you would for an intelligent person who doesn’t know stats.
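If you would like to check your SPSS output for this practice problem against a hand calculation, the following is a minimal Python sketch that computes the group means and the obtained F from the raw scores above. It uses only the standard library; the function name `one_way_anova` and the variable names are illustrative. It does not compute the p-value or the post hoc contrasts, so you will still need SPSS for those.

```python
from statistics import mean

# Happiness scores from the practice problem, one list per exercise program.
groups = {
    "Spinning": [7, 7, 6, 5, 4, 1, 7, 6, 5, 3],
    "Aerobic": [6, 5, 5, 5, 6, 4, 2, 6, 4, 4],
    "Weight Lifting": [2, 1, 3, 4, 2, 3, 1, 2, 4, 2],
}


def one_way_anova(samples):
    """Return (F, df_between, df_within) for a list of independent samples."""
    all_scores = [score for sample in samples for score in sample]
    grand_mean = mean(all_scores)

    # Between-groups sum of squares: how far each group mean is from the
    # grand mean, weighted by the group size.
    ss_between = sum(len(s) * (mean(s) - grand_mean) ** 2 for s in samples)

    # Within-groups sum of squares: how far each score is from its own
    # group mean.
    ss_within = sum((score - mean(s)) ** 2 for s in samples for score in s)

    df_between = len(samples) - 1               # k - 1
    df_within = len(all_scores) - len(samples)  # N - k

    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


f_value, df_between, df_within = one_way_anova(list(groups.values()))

for name, scores in groups.items():
    print(f"{name}: M = {mean(scores):.2f}")
print(f"F({df_between}, {df_within}) = {f_value:.2f}")
```

The F printed here should match the value in the row for your grouping variable in the “Tests of Between-Subjects Effects” table.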
